Virtual server: Difference between revisions

Revisions compared: Christopher Arriola and Meg Taylor (both imported, no edit summary); 90 intermediate revisions by 4 users not shown.

{{subpages}}

{{TOC|right}}

A '''virtual server''' is a [[virtual machine]] (VM) that is running on top of a physical server. The physical server containing the virtual server is commonly called the '''host''', whereas each virtual server contained in the host is referred to as a '''guest'''. A virtual server is created through [[hardware virtualization]].

Virtual servers are isolated in their own virtual environments, allowing multiple instances to be hosted simultaneously on a single server. Guests on the server can run different applications or tasks, on different [[operating systems]], independently of one another. For example, one virtual server can run as a chat server, another as an FTP server, and another as a database server, all concurrently on a single physical machine. To keep the guests independent and consistent, the physical server uses a [[Hypervisor|hypervisor]], whose role is to monitor the state of each guest. To users, each virtual server appears to be a separate physical device, while the resources and processing power of the single physical server are used far more fully.

==History==

[[Virtualization]] was first introduced by [[IBM]] in the 1960s, specifically to make the most of the computing resources of large [[mainframe]] computers. This was done by partitioning mainframe hardware into multiple [[virtual machines]]. At the time, a mainframe computer cost millions of US dollars to buy or thousands of US dollars per month to rent, so virtualization became an essential technology for using mainframes efficiently. It also allowed multiple users in businesses and universities to share a single mainframe, with each user operating an isolated machine of his or her own without affecting other users' machines.

===1960s: IBM System/360 Machines===

The concept of virtual machines started with [[IBM System/360]] mainframes running the [[CP/CMS]] time-sharing [[operating systems|operating system]], whose control program for the System/360 Model 67 was CP-67. CP/CMS was developed by IBM's Cambridge Scientific Center (CSC) and consisted of a control program (CP), which today would be called a hypervisor, and the Cambridge Monitor System (later renamed the Conversational Monitor System, CMS), which served as the user interface. Each user had a single CMS, and the CP created a virtual machine environment for each user. The CP/CMS virtual machine concept provided:

* Isolation between users on the machine

* Simulation of a full machine for each user on the machine

===1970s: IBM System/370 Machines===

In 1972, IBM reimplemented CP/CMS as CP-370 and released it as its first virtual machine operating system product, VM/370 (Virtual Machine Facility/370). Around the same time, IBM System/370 machines gained full hardware support for virtual memory, which significantly improved the efficiency of virtual machines.

===1980s - 1990s: x86 Architecture===

The x86 architecture first appeared in 1978, when Intel released its 8086 CPU. As x86 processors spread, mainframe usage declined, because client-server applications, together with the Windows and Linux operating systems, made server computing much less expensive. IT infrastructure shifted from large mainframe computers to many single-purpose servers. This model, however, created new problems:<ref name="vmware">VMware, Inc. http://www.vmware.com/ Retrieved 08-07-2010</ref>

*''Low Infrastructure Utilization'' – Because servers were single-purpose (e.g. database server, FTP server, web server), they were underutilized, unlike mainframe computers, whose utilization had been maximized through virtualization. By one estimate, only 10%-15% of a typical server's computing resources were being used.

*''Increasing Physical Infrastructure Cost'' – Because organizations needed multiple applications, they needed a separate physical server for each application or task. As the number of servers grew, so did the physical space needed to house and manage them and the energy required to power and cool them.

*''Lack of Disaster Protection'' – Because of the above issues and their associated costs, protection against disasters (e.g. natural causes, hardware corruption, server downtime) was often not addressed at all.

*''High IT Management Cost'' – Highly trained IT professionals were needed to maintain these systems, especially in organizations with large IT departments, which added further to the cost of managing this infrastructure.

===1999: VMware Introduces Virtualization on x86 Systems===

Because of these problems, some organizations revisited virtualization as a possible solution, and in 1999 VMware introduced virtualization on x86 systems. Because x86 processors had no built-in support for virtualization, and virtualization on them had been considered impossible, this innovation surprised many experts. Since then, server utilization has risen from about 10%-15% to about 60%-80%, and the physical space and maintenance required have been reduced by a factor of 5 to 15. Despite these improvements, the hypervisor still carried significant overhead, because there was no hardware assistance.

===2005-2006: Intel and AMD Provide Hardware Support===

Between 2005 and 2006, [[Intel]] and [[AMD]] each released processor extensions that support virtualization in hardware, relieving the hypervisor of some of its overhead. Intel's extensions are known as [[Intel VT-x]], and AMD's as [[AMD-V]].

==Technology==

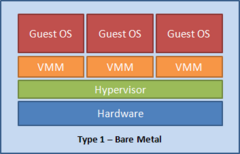

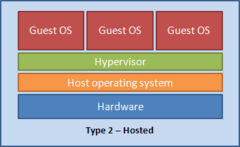

Virtual servers are computers that run in a virtualized environment, whether or not their operating systems are aware of it. A virtualized environment is created by abstracting the hardware layer away from the operating system (OS). This abstraction is provided by a thin layer of software called a hypervisor, which sits between the physical hardware and the operating systems running on top of it.

An operating system that runs in a virtualized environment is called a Guest OS, and the OS that provides the basis of the virtualized environment is called the Host OS.

{{Image|Type1.PNG|right|240px|Hypervisor - Type 1}}

{{Image|Type2.PNG|right|240px|Hypervisor - Type 2}}

===Hypervisor===

A hypervisor, also known as a virtual machine monitor (VMM), is software that provides a thin layer of abstracted hardware to each Guest OS. Guest OSs running on top of the hypervisor "see" the virtual hardware it provides. Depending on the type of hypervisor and the Guest OS in use, the Guest OS may or may not be aware that it is running in a virtualized environment. <ref name="vmm">Rosenblum, Mendel and Garfinkel, Tal. "Virtual Machine Monitors: Current Technology and Future Trends." May 2005. Accessed: http://www.stanford.edu/~stinson/paper_notes/virt_machs/vmms.txt.</ref>
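As a rough illustration of what it means for a Guest OS to "see" virtual hardware, the following Python sketch assumes a toy hypervisor that gives each guest a private virtual disk carved out of one shared host storage area. The names `ToyHypervisor`, `create_guest`, `guest_read` and `guest_write` are hypothetical and do not correspond to any real hypervisor's API. Real hypervisors intercept device access at a much lower level.

```python
# Hypothetical toy model, not a real hypervisor API.
class ToyHypervisor:
    """Each guest gets a private virtual disk inside one host buffer."""

    def __init__(self, blocks_per_guest: int, block_size: int = 4):
        self.blocks_per_guest = blocks_per_guest
        self.block_size = block_size
        self.host_storage = bytearray()  # the single physical "disk"
        self.first_block = {}            # guest name -> first host block it owns

    def create_guest(self, name: str) -> None:
        # Reserve a fresh, zero-filled range of host blocks for the new guest.
        self.first_block[name] = len(self.host_storage) // self.block_size
        self.host_storage.extend(bytes(self.blocks_per_guest * self.block_size))

    def _host_offset(self, name: str, block: int) -> int:
        # Each guest numbers its disk blocks from 0; the hypervisor maps that
        # virtual block number to a byte offset in host storage.
        if not 0 <= block < self.blocks_per_guest:
            raise IndexError(f"{name}: block {block} is outside its virtual disk")
        return (self.first_block[name] + block) * self.block_size

    def guest_write(self, name: str, block: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("data must fill exactly one block")
        start = self._host_offset(name, block)
        self.host_storage[start:start + self.block_size] = data

    def guest_read(self, name: str, block: int) -> bytes:
        start = self._host_offset(name, block)
        return bytes(self.host_storage[start:start + self.block_size])


hv = ToyHypervisor(blocks_per_guest=2)
hv.create_guest("chat")
hv.create_guest("ftp")
hv.guest_write("chat", 0, b"CHAT")  # both guests write to their block 0...
hv.guest_write("ftp", 0, b"FTP!")
print(hv.guest_read("chat", 0))     # ...but each reads back only its own data
```

Both guests number their blocks from 0, yet their writes land in different places on the host. Neither guest can see or overwrite the other's data.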

'''Two types of hypervisors.''' <ref name="xenhypervisor">"How are Hypervisor Classified." Accessed: http://www.xen.org/files/Marketing/HypervisorTypeComparison.pdf.</ref>

*''Type 1'' - Bare metal: Hypervisors that run directly on the hardware. Type 1 hypervisors are often built on a microkernel that controls both the hardware and the Guest OSs. Examples include ''Xen Hypervisor, VMware ESX, Microsoft Hyper-V.''<ref name="vmm" />

*''Type 2'' - Hosted: Hypervisors that run on top of a Host OS. The Host OS controls the hardware, the hypervisor layer sits on top of the Host OS, and each Guest OS runs as a process within the Host OS. Examples include ''Virtual PC, VMware Workstation, VirtualBox, Parallels Desktop for Mac.''<ref name="vmm" />

===Virtualization Categories===

There are three main virtualization techniques, each with its own advantages and disadvantages.

====Full virtualization====

In full virtualization, the hypervisor emulates all of the underlying hardware, including CPU management, memory management, I/O processes and peripherals. In the x86 processor environment, full virtualization is accomplished with a software technique called [[binary translation]]. Binary translation scans the Guest OS's code and replaces the CPU instructions that cannot be virtualized with equivalent sequences of instructions that can be. Translation happens at run time, and translated instructions are cached to improve performance. In the x86 domain, VMware pioneered binary translation and offers multiple platforms for full virtualization.<ref name="vmm" /><ref name="mskb">"Intel Privileged and Sensitive Instructions." February 1, 2002. Accessed: http://support.microsoft.com/kb/114473.</ref>
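The translate-and-cache idea described above can be sketched in Python as follows. This is a toy model, not how VMware's translator works internally. Instructions are hypothetical `(opcode, operand)` tuples, and only three of the sensitive x86 instructions (`popf`, `sgdt`, `smsw`) are listed. The `vmm_emulate` pseudo-instruction is an invented stand-in for a call into the hypervisor.

```python
# Toy instruction format (hypothetical): (opcode, operand) tuples.
# POPF, SGDT and SMSW are real x86 instructions that, on classic x86
# processors, run without trapping outside ring 0, so a hypervisor cannot
# simply intercept them. This set is deliberately incomplete.
SENSITIVE = {"popf", "sgdt", "smsw"}


class BinaryTranslator:
    def __init__(self):
        self.cache = {}        # guest code address -> translated block
        self.translations = 0  # number of blocks actually translated

    def translate(self, addr, block):
        if addr in self.cache:
            return self.cache[addr]  # reuse the earlier translation
        translated = []
        for opcode, operand in block:
            if opcode in SENSITIVE:
                # Replace the unsafe instruction with an explicit call into the
                # hypervisor, which emulates it against the virtual CPU state.
                translated.append(("vmm_emulate", (opcode, operand)))
            else:
                # Safe instructions are copied unchanged and run at native speed.
                translated.append((opcode, operand))
        self.cache[addr] = translated
        self.translations += 1
        return translated


bt = BinaryTranslator()
guest_block = [("mov", 1), ("popf", None), ("add", 2)]
print(bt.translate(0x1000, guest_block))
bt.translate(0x1000, guest_block)  # second call is served from the cache
print(bt.translations)             # 1
```

A real translator must also invalidate cached translations when the guest modifies its own code.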

====Paravirtualization====

Paravirtualization involves modifying the kernel of the Guest OS to make it "hypervisor aware". The modified Guest OS replaces non-virtualizable instructions with special hypercalls, which communicate directly with the hypervisor. Paravirtualization has been around since the early days of virtual servers. In the current x86 domain, the open source Xen hypervisor from XenSource (now owned by Citrix) is the dominant player.<ref name="vmm" /><ref name="whatisxen">"What is Xen." Accessed: http://www.xen.org/files/Marketing/WhatisXen.pdf.</ref>
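The following Python code is a minimal sketch of the hypercall idea. The `Hypervisor` and `ParavirtGuestKernel` classes and the hypercall names `set_interrupts` and `update_page_table` are hypothetical. Xen's real hypercall interface differs.

```python
# Hypothetical names throughout; a toy model of hypercalls, not Xen's interface.
class Hypervisor:
    """Exposes a small table of hypercalls to paravirtualized guests."""

    def __init__(self):
        self.log = []            # (guest, hypercall, args) for each request
        self.interrupts_on = {}  # guest -> virtual interrupt flag
        self.page_tables = {}    # guest -> {virtual page: physical page}
        self.handlers = {
            "set_interrupts": self._set_interrupts,
            "update_page_table": self._update_page_table,
        }

    def hypercall(self, guest, name, *args):
        # Single entry point: the guest requests, the hypervisor checks and acts.
        if name not in self.handlers:
            raise ValueError(f"unknown hypercall {name!r}")
        self.log.append((guest, name, args))
        return self.handlers[name](guest, *args)

    def _set_interrupts(self, guest, enabled):
        # Only this guest's virtual CPU is affected, never the real one.
        self.interrupts_on[guest] = enabled
        return 0

    def _update_page_table(self, guest, vpage, ppage):
        # A real hypervisor would first verify that ppage belongs to this guest.
        self.page_tables.setdefault(guest, {})[vpage] = ppage
        return 0


class ParavirtGuestKernel:
    """Guest kernel modified to ask the hypervisor instead of using privileged instructions."""

    def __init__(self, name, hv):
        self.name = name
        self.hv = hv

    def disable_interrupts(self):
        # An unmodified kernel would execute CLI here.
        return self.hv.hypercall(self.name, "set_interrupts", False)

    def map_page(self, vpage, ppage):
        # An unmodified kernel would write its page table directly.
        return self.hv.hypercall(self.name, "update_page_table", vpage, ppage)


hv = Hypervisor()
db = ParavirtGuestKernel("db", hv)
db.disable_interrupts()
db.map_page(5, 42)
print(hv.log)
```

The modified kernel asks the hypervisor instead of executing privileged instructions itself. As a result, nothing needs to be trapped or translated, and the hypervisor only has to validate each request.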

====Hardware Assisted====

Hardware-assisted virtualization relies on the processor and other hardware components to assist the virtualization process. For x86 platforms, Intel and AMD released hardware virtualization support in 2005 and 2006. Intel's version is called Intel VT, and AMD's is called AMD-V. Both technologies add a special set of processor instructions that hypervisors can use to offload costly instruction emulation. At present, hardware virtualization is still early in its development and does not yet outperform software-only approaches. However, hardware support is expected to become increasingly important, and both Intel and AMD are committed to improving how hardware can assist virtualization.<ref name="vmm" /><ref name="vmworld">Lo, Jack. "VMware and Hardware Assist Technology (Intel VT and AMD-V)." 2006. Accessed: http://download3.vmware.com/vmworld/2006/tac9463.pdf.</ref>
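On Linux, you can check whether a processor advertises these extensions in `/proc/cpuinfo`. The `vmx` flag indicates Intel VT-x, and the `svm` flag indicates AMD-V. The helper below is a small sketch that parses that file's text. The function name `hw_virt_support` is hypothetical. Also note that a listed flag may not guarantee the feature is enabled in firmware.

```python
from pathlib import Path
from typing import Optional


def hw_virt_support(cpuinfo_text: str) -> Optional[str]:
    """Hypothetical helper: return 'Intel VT-x', 'AMD-V' or None from /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a "flags" line listing its feature flags.
        if line.startswith("flags"):
            flags = set(line.partition(":")[2].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # Linux only
    if cpuinfo.exists():
        print(hw_virt_support(cpuinfo.read_text()))
```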

{| class="wikitable"

|-

!

! Advantages

! Disadvantages

|-

! Full virtualization

|

* Pure software implementation; the Guest OS does not need to be "hypervisor aware".

* The Guest OS does not have to be modified in order to be supported.

* Any x86 OS, including Windows 2000, Windows XP and Linux, can be virtualized.

* Greater portability due to identical underlying "hardware".

* Virtual machines can be easily migrated between physical machines.

|

* There are 17 non-virtualizable CPU instructions that the software has to emulate. <ref name="mskb" />

* Emulating these instructions is costly and slows down the Guest OS.

* Greater memory requirements.

|-

! Paravirtualization

|

* No CPU emulation overhead.

* Greater integration with the hardware.

* Performance can be tuned further by optimizing the Guest OS.

|

* The Guest OS must be modified to be "hypervisor aware".

* Modifications are required in the kernel and other "deep" parts of the OS.

* OSs that cannot be modified, such as Windows 2000 and XP, cannot be supported.

* Because of their closer ties to the underlying hardware, Guest OSs are less portable.

* A narrower range of underlying hardware is supported.

|-

! Hardware Assisted

|

* Guest OSs do not need to be modified.

* Eliminates unnecessary software emulation within the hypervisor.

* Reduces memory requirements.

* Greater overall performance and compatibility.

|

* The current generation of hardware-assisted hypervisors is not faster than the software-only approach.

* When migrating between machines, the target hardware must also support hardware virtualization.

* Intel VT and AMD-V are not directly compatible with each other.

|}

====Intel VT====

Intel's hardware virtualization technology, Intel VT, comprises three separate components.

The processor component is called VT-x on x86 processors such as Xeon, and VT-i on Itanium processors.

Intel also supports virtualization in its chipsets through VT-d (Virtualization Technology for Directed I/O).

VT-d handles I/O from the Guest OSs and enables hypervisors to offload I/O tasks to the chipset, improving performance.

The third component, VT-c, handles network traffic. With a VT-c-enabled network card, the card's own chipset can handle the network traffic between virtual machines.<ref name="usenix">Robin, John Scott. "Analysis of the Intel Pentium's Ability to Support a Secure Virtual Machine Monitor." 2000. Accessed: http://www.usenix.org/events/sec2000/full_papers/robin/robin_html/index.html.</ref><ref name="intelvt">"A Superior Hardware Platform for Server Virtualization." Accessed: http://download.intel.com/technology/virtualization/320426.pdf.</ref>

====AMD-V====

AMD markets its hardware virtualization technology under the name AMD-V. AMD-V offers advantages similar to those of Intel VT, providing additional virtualization instructions that hypervisors can use to eliminate costly instruction emulation. In addition to CPU instructions, AMD-V, like Intel VT, improves memory management by letting the hardware, rather than software, perform address lookups, which improves performance. Lastly, AMD-V includes special I/O features that improve I/O throughput by offloading I/O operations to the hardware.<ref name="vmworld"/>

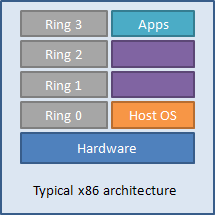

{{Image|X86 typical architecture.png|right|240px|Typical x86 architecture in privilege mode}}

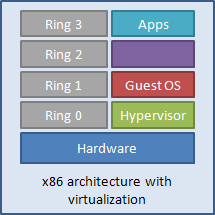

{{Image|X86 virtualized.png|right|240px|x86 architecture in virtualized environment}}

===x86 virtualization challenges===

In an x86 operating system environment, the OS is designed to run directly on the hardware, and user applications interact with the hardware through the OS. x86 processors enforce this hierarchy with four privilege levels, known as protection rings and numbered 0 to 3. The base OS is expected to run in ring 0, where it has the highest privileges and can communicate directly with the hardware. User applications typically run in ring 3, where they are least privileged and confined. In a virtualized system, the hypervisor simulates the hardware and sits below the Guest OS, so it takes ring 0 and pushes the Guest OS to a less privileged ring. This poses a major challenge, because an OS that expects to run in ring 0 may not behave correctly in a less privileged ring. Some sensitive instructions, rather than trapping to the hypervisor, silently behave differently there. VMware was the first to overcome this difficulty, using the binary translation technique described above.<ref name="pcarch">Cox, Russ. "PC Architecture from inside the CPU." 2002. Accessed: http://swtch.com/~rsc/talks/pcarch.pdf.</ref>
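A deliberately simplified Python model of two x86 instructions shows the difference between an instruction that traps and one that silently misbehaves. The model assumes an I/O privilege level (IOPL) of 0 and ignores everything else about the processor. `execute` and `GeneralProtectionFault` are hypothetical names.

- `HLT` is privileged, so running it outside ring 0 faults and the hypervisor gets control.
- `POPF` is sensitive but not privileged, so outside ring 0 its change to the interrupt flag is simply dropped.

```python
# Hypothetical toy model of two x86 instructions; assumes IOPL = 0.
class GeneralProtectionFault(Exception):
    """Stands in for the #GP fault that transfers control to the hypervisor."""


def execute(instruction, cpu):
    """Run one instruction on a toy CPU state dictionary."""
    opcode, operand = instruction
    if opcode == "hlt":
        # HLT is privileged: outside ring 0 it faults, so the hypervisor can trap it.
        if cpu["ring"] != 0:
            raise GeneralProtectionFault(opcode)
        cpu["halted"] = True
    elif opcode == "popf":
        # POPF is sensitive but not privileged: outside ring 0 (with IOPL 0)
        # its change to the interrupt flag is silently ignored; no fault occurs.
        if cpu["ring"] == 0:
            cpu["interrupts_enabled"] = operand
    else:
        raise NotImplementedError(opcode)


native_kernel = {"ring": 0, "interrupts_enabled": True, "halted": False}
guest_kernel = {"ring": 1, "interrupts_enabled": True, "halted": False}  # deprivileged

execute(("popf", False), native_kernel)
execute(("popf", False), guest_kernel)
print(native_kernel["interrupts_enabled"], guest_kernel["interrupts_enabled"])  # False True

try:
    execute(("hlt", None), guest_kernel)
except GeneralProtectionFault:
    print("HLT trapped to the hypervisor")
```

The guest kernel believes it has disabled interrupts, but nothing happened and the hypervisor was never told. This silent failure is why an unmodified Guest OS could not simply be deprivileged on x86.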

===Memory Virtualization===

In addition to virtualizing the CPU, a virtualized environment must also virtualize memory management. In full virtualization, where Guest OSs are not "hypervisor aware", each Guest OS expects its physical memory to start at address 0. However, the hypervisor sits between the OS and the hardware, so the Guest OS cannot have direct access to the memory it expects. To run multiple virtual machines within a single physical memory space, hypervisors use shadow page tables, which map each virtual machine's virtual addresses directly to host physical memory. Because guest memory is ultimately backed by host memory, a virtual machine generally cannot be allocated more memory than the host physically has. Shadow page tables allow seamless translation between virtual and physical memory, but maintaining them takes a toll on the processor and creates overhead. The second generation of hardware virtualization support helps by moving this second level of address lookup from software into hardware. <ref name="vmesx">Waldspurger, Carl A. "Memory Resource Management in VMware ESX Server." Dec 2002. Accessed: http://waldspurger.org/carl/papers/esx-mem-osdi02.pdf.</ref>
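A shadow page table can be thought of as the composition of two mappings:

- the Guest OS's page table (guest virtual page to guest physical page)
- the hypervisor's own map (guest physical page to host physical page)

The Python sketch below models each table as a flat dictionary of page numbers with 4 KiB pages. Real x86 page tables are multi-level structures, and the function names are hypothetical.

```python
# Hypothetical toy model: flat single-level tables keyed by page number.
PAGE_SIZE = 4096  # 4 KiB pages


def build_shadow_table(guest_page_table, host_map):
    """Compose guest virtual page -> guest physical page (maintained by the Guest OS)
    with guest physical page -> host physical page (maintained by the hypervisor)."""
    shadow = {}
    for virtual_page, guest_physical_page in guest_page_table.items():
        if guest_physical_page in host_map:
            shadow[virtual_page] = host_map[guest_physical_page]
        # Pages the hypervisor has not backed yet are omitted here; a real
        # hypervisor would fill them in when the guest first touches them.
    return shadow


def translate(shadow, virtual_address):
    """What the real MMU does with the shadow table: one lookup per access."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    return shadow[page] * PAGE_SIZE + offset


guest_page_table = {0: 5, 1: 7}  # Guest OS's view: virtual page -> guest physical page
host_map = {5: 100, 7: 200}      # hypervisor's view: guest physical page -> host page
shadow = build_shadow_table(guest_page_table, host_map)
print(shadow)                    # {0: 100, 1: 200}
print(translate(shadow, PAGE_SIZE + 10) == 200 * PAGE_SIZE + 10)  # True
```

The overhead mentioned above comes from keeping this table in sync. Whenever the Guest OS edits its page table, the hypervisor must notice the change, typically by write-protecting the guest's page tables, and rebuild the affected shadow entries.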

'''Hardware Assisted'''<br />

With a hardware-assisted memory management unit, the hypervisor spends less effort on memory management. It can then focus on other major tasks, such as handling communication between the Guest OSs and the physical hardware, which improves performance. Intel's implementation, part of Intel VT, is called Extended Page Tables (EPT). With EPT, the processor itself translates the Guest OS's physical addresses to host physical addresses, bypassing the translation the hypervisor would otherwise perform in software. AMD's implementation of the same approach is called Nested Page Tables (NPT).
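Compare shadow paging with the two-stage lookup that EPT and NPT perform in hardware, sketched below. On a TLB miss, the processor walks the guest's page table and then the hypervisor's table, with no software involvement. As in a flat-table model, tables here are Python dictionaries of page numbers and pages are 4 KiB. `nested_translate` is a hypothetical name, and a real walk involves multiple levels at each stage.

```python
# Hypothetical toy model of a two-stage (nested) page walk.
PAGE_SIZE = 4096  # 4 KiB pages


def nested_translate(virtual_address, guest_page_table, ept, tlb):
    """Model of the hardware two-stage walk done by EPT/NPT on a TLB miss."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in tlb:
        guest_physical_page = guest_page_table[page]  # stage 1: the Guest OS's own table
        tlb[page] = ept[guest_physical_page]          # stage 2: the hypervisor's table
    return tlb[page] * PAGE_SIZE + offset


guest_page_table = {0: 5}
ept = {5: 100, 7: 200}
tlb = {}
print(nested_translate(12, guest_page_table, ept, tlb) == 100 * PAGE_SIZE + 12)  # True

# The Guest OS maps a new page by editing its own table; the hypervisor is not involved.
guest_page_table[1] = 7
print(nested_translate(PAGE_SIZE, guest_page_table, ept, tlb) == 200 * PAGE_SIZE)  # True
```

The Guest OS can now edit its own page table freely, so the hypervisor no longer has to intercept those writes. The cost moves instead to longer page walks on TLB misses.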

===Future===

Continued development of virtualization by software and hardware companies is expected to make virtual servers more efficient, better utilized and faster. Commitments from hardware companies such as Intel and AMD, backed by software companies such as Microsoft, VMware, Citrix and Oracle, are likely to drive further innovation and wider deployment of virtual servers.

===Hosting Service Providers===

Hosting service providers are one area of today's computing sector that relies heavily on virtualization. They let end users create and upload web applications to their servers. A typical hosting service provider runs hundreds to thousands of servers in an environmentally controlled data center and sells portions of those servers to customers. Before virtualization, a single server was set up for each customer, who could log in and upload files for the server to serve. This model gave way to shared hosting, in which a single server hosted multiple customers, each with a separate web directory. However, shared hosting caused problems for customers who needed to install their own custom applications or run a different version of the web server software from the one installed. Because of these challenges, the hosting sector quickly adopted virtual private servers. Virtual private servers let each customer run a full server of their own and install their own programs, without worrying about other tenants or custom configurations. Most large hosting providers offer virtual private servers as one of their packages and let end users install either Linux or Windows to run their products.

====Cloud Computing====

[[Cloud computing]] is a recent phenomenon, and a buzzword. It goes beyond a virtual private server by letting the end user shrink or expand resources depending on the workload. In a typical virtual server environment, a single physical server is divided into multiple virtual servers. In a cloud computing environment, the reverse is also possible: the resources of multiple virtual servers can be combined into a single logical server. This is an emerging area, with Amazon and Google leading the effort. They use their well-established data centers to offer customers the security and reliability of those facilities. In the traditional web hosting model, customers pay a monthly fee to the hosting company. Cloud computing fees, by contrast, are usually charged by the hour, so customers pay only for the computing capacity they use, much like electricity from the grid.

==Economics==

One of the main forces driving industry toward virtualization is potential cost savings. Running multiple servers on one machine avoids the need for a separate physical server for each operating system instance. Organizations save not only on purchasing hardware but also on maintaining it, since fewer servers need physical maintenance, climate control and space. Fewer machines also means lower energy costs.
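The consolidation argument can be made concrete with a back-of-the-envelope calculation. The Python sketch below estimates how many physical hosts are needed when lightly loaded servers are consolidated onto virtualized hosts run at a higher target utilization. It assumes the new hosts have the same capacity as the old servers. It considers CPU load only, ignoring memory, I/O, peak load and failover headroom. The function name `hosts_needed` and the example figures are hypothetical. The figures were chosen from the 10%-15% and 60%-80% utilization ranges quoted in the History section.

```python
def hosts_needed(servers: int, avg_util_pct: int, target_util_pct: int) -> int:
    """Hypothetical estimate of virtualization hosts needed to absorb `servers`
    machines that each average `avg_util_pct` percent CPU use, if every host
    is run at `target_util_pct` percent. CPU load only; hosts are assumed to
    match the original servers in capacity."""
    if not 0 < target_util_pct <= 100:
        raise ValueError("target utilization must be between 1 and 100 percent")
    total_load = servers * avg_util_pct       # in "percent of one server" units
    return -(-total_load // target_util_pct)  # ceiling division on integers


before = 100
after = hosts_needed(before, avg_util_pct=12, target_util_pct=70)
print(after, round(before / after, 1))  # 18 5.6
```

In this example, 100 servers at 12% average utilization fit on 18 hosts run at 70%, a reduction of roughly 5.6 times. That falls within the 5-15 times range quoted earlier.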

===Virtual Server Market Overview===

There is considerable potential for growth in the virtual server market. In one survey, two-thirds of the companies surveyed ran less than half of their applications on virtual servers, and over half had even begun to virtualize their desktops. VMware estimates that the number of enterprises beginning to adopt its x86 virtualization has grown by 25% each year.<ref name="woodward">Woodward, Kirk. "Virtual Server Market Trends." October, 2009. Accessed: http://www.faulkner.com/products/faulknerlibrary/default.htm.</ref> These figures suggest not only room for growth in the market but also demand from enterprises for virtual servers. Financial estimates put the market's value at over 19 billion US dollars.<ref name="furness">Furness, Victoria. "The Future of Virtualization. Emerging trends and the evolving vendor landscape." Business Insights, October 2009. Accessed: http://www.bi-interactive.com/index.aspx</ref>

====Why Virtual?====

What is behind all this growth in the virtual server industry? In a word: money. According to Business Insights, moving to virtual servers can cut costs in several areas:<ref name="furness" />

* Physical machine costs - An enterprise running 250 dual-core servers could save over three million dollars over a three-year period. Additionally, moving to a server-hosted desktop virtualization solution could save close to $125,000 in power costs per 1,000 PCs per year.

* Power costs - Moving to virtual servers often requires buying new hardware. Over the long term, however, IBM research states that server space could be reduced by up to 90%. Fewer servers means less money spent on power and cooling.

* IT department costs - Beyond the cost of purchasing and powering physical servers, labor is the largest cost for an IT department, accounting for 70% of it. Running virtual servers demands a more specialized IT crew, but it also requires a smaller staff, which matters to companies looking to reduce labor costs.

* Line of business (LOB) users - Because the virtual server farm is smaller and easier to maintain, services are more likely to remain available. Support can also be provided more quickly, increasing the productivity of LOB users.

====Corporate Landscape====

The current corporate market for creating virtual servers is volatile and involves a few main players. <ref name="woodward" />

'''Major Players'''

*''VMware'' - The current leader in the virtual server market. In 2007, VMware held over half of the x86 server virtualization market and was used by over 80% of large enterprises. Its main products are:

** VMware Infrastructure - Used to create and manage virtual server environments

** VMware VirtualCenter - Used for monitoring

** VMware Lab Manager - Used to create test environments

** VMware Server - Server partitioner

** VMware Converter - Used to convert physical servers to virtual servers

*''Microsoft'' - Microsoft first tried to enter the virtual server market in 2002 but was relatively unsuccessful, because purchasers were uneasy about running alternative operating systems on Microsoft's offering. In 2006, Microsoft launched Virtual Server Manager, claiming that it could do everything VMware's virtual servers could. That claim was a bit of a reach, but Microsoft did release a free virtual server that supported Linux-based OSs, which helped calm the unease felt in 2002. Its main product is:

** Microsoft Windows Server Hyper-V - Not quite as robust as VMware's products, but a direct challenge to them.

*''Citrix / XenSource'' - Citrix is the leader in the desktop virtualization market, with a 19% market share. In 2007, Citrix purchased XenSource. It focuses on virtualization built entirely on open source code. Its main products are:

** XenExpress - Virtualization starter package

** XenServer - Virtualization platform for Windows and Linux servers

** XenEnterprise - Enterprise-wide virtual management tool

These three companies make up the vast majority of the virtual server market. Their lead is large enough that the market landscape is not expected to change drastically in the next several years.

====Challenges to Growth====

There are several challenges this industry faces if it is to continue its fast growth: <ref name="greiner">Greiner, Lynn. "Virtual Computing Overview." April, 2010. Accessed: http://www.faulkner.com/products/faulknerlibrary/default.htm.</ref>

# ''Security'' - According to one report, 60% of virtual servers are less secure than the physical machines they replaced. That figure is expected to fall to about 30% as the technology advances. However, many enterprises have especially sensitive data and processes to protect. For them, that level of security may be a limiting factor in switching to virtual servers, despite the cost advantages.

# ''Licensing'' - Software vendors are still working under the physical server model, even though hardware has evolved to virtual servers. Under the physical server model, an enterprise would purchase one license for each machine that needed a piece of software. In the virtual server world, however, each physical machine might run dozens of instances of the same software, requiring dozens of licenses. This immediately eats into the money saved by using fewer physical servers. <br/> Software manufacturers do appear to be starting to change their pricing structures. Microsoft, for example, allows a dual-core machine to run its products with only one license. Certain customers who have purchased specific versions of the Windows operating system can also run up to four copies of that OS on the desktop. Nevertheless, licensing remains a hefty cost for large enterprises, and it makes the cost/benefit analysis of moving to virtual servers difficult.

# ''Software'' - Beyond licensing concerns, not all software can be virtualized, for various technical reasons. Internet Explorer, for instance, is extremely difficult to virtualize. As enterprises use more and more virtual servers, balancing applications that can run virtually against those that cannot becomes a challenge.

# ''IT Technicians'' - The move to virtual servers requires the IT staff who maintain them to be more skilled and knowledgeable. Technicians remain responsible for the hardware they looked after before. They must also have the skills to service the wider array of software that might be found on virtual machines. For example, one host server might be running instances of both Windows and Linux. In the past, that would have required at least two servers, each potentially with its own technician. It is not feasible to hire a person for each different operating system, so the enterprise must hire more highly skilled IT staff.

These challenges are significant, but analysis of market conditions suggests that they do not act as barriers to entry.

==References==

{{reflist|2}}

Revision as of 23:59, 7 October 2013

A virtual server is a virtual machine (VM) that is running on top of a physical server. The physical server containing the virtual server is commonly called the host whereas each virtual server contained in the host is referred to as a guest. A virtual server is created through hardware virtualization.

Virtual servers are isolated in their own virtual environment allowing multiple instances to be hosted simultaneously on a single server. Guests on the server can run different applications or tasks, on different operating systems, independently from one another. From example, one virtual server can run as a chat server, another as an FTP server, and another as database server, all of which can run concurrently on a single physical machine. To ensure independence and consistency, the physical server utilizes a hypervisor whose role is to monitor the states of each guest. To server users, this appears as if each virtual server is a unique physical device thus maximizing the resources and processing-power of a single physical server.

History

Virtualization was first introduced by IBM in the 1960’s specifically to maximize the computing resources of large mainframe computers. This was done by partitioning mainframe hardware into multiple virtual machines. At the time, a mainframe computer costs millions of US dollars or thousands of US dollars to rent per month, thus, virtualization became an essential technology to efficiently utilize mainframe computers. In addition, this allowed multiple users in businesses and universities to share a single mainframe computer wherein each user has his or her own isolated machine to operate on without affecting another user’s machine.

1960's: IBM System/360 Machines

The concept of virtual machines started with IBM System/360 mainframes running on CP-67 or CP/CMS time-sharing operating systems. CP/CMS was first developed by IBM’s Cambridge Scientific Center (CSC) which consists of a control program (CP), now widely known as a hypervisor, and a console/conversational monitor screen (CMS) which served as a user interface. Each user would have a single CMS and the CP created the virtual machine environment for each user. The introduction of the CP/CMS virtual machine concept allowed:

- Isolation between each user on the machine

- Simulation of a full machine for each user on the machine

1970's: IBM System/370 Machines

In 1972, IBM re-implemented CP/CMS as CP-370 and included it as their first virtual machine operating system on their VM/370 or Virtual Machine Facility/370 line of operating systems. At the time, full-support for virtual memory on the hardware side was also introduced on IBM System/370 machines which significantly improved the efficiency and utilization of virtual machine use.

1980s - 1990s: x86 Architecture

The x86 architecture first appeared in 1978, when Intel released its 8086 CPU. As x86 processors spread, mainframe usage declined, since client-server applications, along with the Windows and Linux operating systems, made server computing significantly less expensive. This also shifted IT infrastructure from large mainframe computers to many single-purpose servers. This model, however, presented additional problems:[1]

- Low Infrastructure Utilization – Because servers were single-purpose (e.g. database server, FTP server, web server), they were poorly utilized compared with their counterpart, the mainframe computer, whose utilization was maximized through virtualization. By one estimate, only 10%-15% of a typical server's computing resources were being used.

- Increasing Physical Infrastructure Cost – Because organizations needed multiple applications, they needed a separate physical server for each application or task. As the number of servers grew, so did the physical space needed to house and manage them and the energy required to power and cool them.

- Lack of Disaster Protection – Because of the above issues and their associated costs, protection against disasters (e.g. natural disasters, hardware failure, server downtime) was not even addressed.

- High IT Management Cost – Highly trained IT professionals were needed to maintain these systems especially within organizations with large IT sections. This again contributed to the costs necessary to manage these infrastructures.

1999: VMware Introduces Virtualization on x86 Systems

Because of these problems, some organizations revisited virtualization as a possible solution, and in 1999 VMware introduced virtualization on x86 systems. Because x86 processors had no hardware support for virtualization, this innovation surprised many experts, who had considered virtualization on these systems impossible. Since then, server utilization has increased from ~10%-15% to ~60%-80%, and the physical space and maintenance required have been reduced by a factor of 5 to 15. Despite these improvements, the hypervisor still imposed significant overhead because there was no hardware assistance.

2005-2006: Intel and AMD Provide Hardware Support

Between 2005 and 2006, Intel and AMD each released hardware support for virtualization to relieve some of the overhead imposed on the hypervisor. Intel’s extensions are called Intel VT-x and AMD’s are called AMD-V.

Technology

A virtual server is a computer that runs in a virtualized environment, whether or not its operating system is aware of it. A virtualized environment is created by abstracting the hardware from the operating system (OS). This abstraction is implemented by a thin layer of software called a hypervisor, which sits between the physical hardware and the operating system running on top of it.

An operating system that runs in a virtualized environment is called a guest OS, and the OS that provides the basis of the virtualized environment is called the host OS.

Hypervisor

A hypervisor, also known as a virtual machine monitor (VMM), is software that presents a thin layer of abstracted hardware to the guest OS. A guest OS running on a hypervisor "sees" the virtual hardware provided by the hypervisor. Depending on the type of hypervisor and the guest OS in use, the guest OS may or may not be aware that it is running in a virtualized environment.[2]

There are two types of hypervisors:[3]

- Type 1 - Bare metal: Hypervisors that run directly on the hardware. Type 1 hypervisors are usually built on a microkernel that controls both the hardware and the guest OSs. Examples are the Xen hypervisor, VMware ESX and Microsoft Hyper-V.[2]

- Type 2 - Hosted: Hypervisors that run on top of a host OS. The host OS controls the hardware, the hypervisor layer runs on top of the host OS, and each guest OS runs as a process within the host OS. Examples are Virtual PC, VMware Workstation, VirtualBox and Parallels Desktop for Mac.[2]

Virtualization Categories

There are three main virtualization techniques, each with its own advantages and disadvantages.

Full virtualization

Full virtualization is an environment in which the hypervisor emulates all underlying hardware components, including the CPU, memory management, I/O and peripherals. On x86 processors, full virtualization is accomplished with a software technique called binary translation. Binary translation scans the guest OS's code as it runs and replaces the CPU instructions that cannot be virtualized with instruction sequences that have the intended effect on the virtual machine. Translation happens on the fly, and translated instruction sequences are cached to improve performance. In the x86 domain, VMware is the leading proponent of binary translation and offers multiple platforms for full virtualization.[2][4]
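The translate-and-cache idea can be illustrated with a toy Python model. This is a sketch under stated assumptions, not VMware's translator: guest code is represented as a list of instruction strings rather than machine code, the instruction set is a small illustrative subset of x86 instructions that do not trap when a deprivileged kernel runs them, and the `vcpu_*` call targets and the `BinaryTranslator` class are hypothetical names.

```python
from dataclasses import dataclass, field

# Illustrative subset of x86 instructions that are sensitive but do not trap
# when a deprivileged guest kernel executes them, so a translator must
# rewrite them. The "vcpu_*" replacement targets are hypothetical.
NON_VIRTUALIZABLE = {"pushf", "popf", "sgdt", "sidt", "smsw"}


@dataclass
class BinaryTranslator:
    # Translation cache: guest block address -> translated instruction list.
    cache: dict = field(default_factory=dict)
    blocks_translated: int = 0

    def translate_block(self, addr, block):
        """Return a translated copy of the guest code block starting at addr."""
        cached = self.cache.get(addr)
        if cached is not None:
            return cached  # reuse earlier work instead of translating again
        translated = []
        for insn in block:
            opcode = insn.split()[0]
            if opcode in NON_VIRTUALIZABLE:
                # Replace with a call into the hypervisor's emulation of
                # this instruction against the virtual CPU state.
                translated.append(f"call vcpu_{opcode}")
            else:
                # Safe instructions are copied through unchanged.
                translated.append(insn)
        self.cache[addr] = translated
        self.blocks_translated += 1
        return translated


bt = BinaryTranslator()
guest_code = ["mov eax, 1", "pushf", "add eax, 2", "popf"]
print(bt.translate_block(0x1000, guest_code))
bt.translate_block(0x1000, guest_code)  # second execution hits the cache
print(bt.blocks_translated)             # 1
```

A real translator works on machine code, must also fix up branch targets, and typically translates only guest kernel code, letting user-level code run directly.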

Paravirtualization

Paravirtualization involves modifying the kernel of the guest OS to make it "hypervisor aware". The modified guest OS replaces non-virtualizable instructions with special hypercalls that communicate directly with the hypervisor. Paravirtualization has been around since the inception of virtual servers; in today's x86 domain, the dominant player is the open source Xen hypervisor, backed by XenSource (now owned by Citrix).[2][5]
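The difference from full virtualization is that the guest cooperates: instead of executing a sensitive instruction and relying on the hypervisor to catch it, the modified kernel calls the hypervisor explicitly. The Python sketch below models that contract. It is illustrative only; the `Hypervisor` and `ParavirtGuestKernel` classes and the hypercall names `set_interrupts`/`get_interrupts` are hypothetical and do not reflect Xen's actual hypercall interface.

```python
class Hypervisor:
    """Toy hypervisor that services hypercalls from paravirtualized guests."""

    def __init__(self):
        # Per-guest virtual CPU state; only the interrupt-enable flag is modeled.
        self.vcpu = {}
        self.handlers = {
            "set_interrupts": self._set_interrupts,
            "get_interrupts": self._get_interrupts,
        }

    def hypercall(self, guest_id, name, *args):
        # A hypercall is an explicit transfer of control to the hypervisor,
        # analogous to a system call from an application to its OS.
        handler = self.handlers.get(name)
        if handler is None:
            raise ValueError(f"unknown hypercall: {name}")
        return handler(guest_id, *args)

    def _set_interrupts(self, guest_id, enabled):
        self.vcpu.setdefault(guest_id, {})["if"] = bool(enabled)

    def _get_interrupts(self, guest_id):
        # Guests start with interrupts enabled unless they changed it.
        return self.vcpu.get(guest_id, {}).get("if", True)


class ParavirtGuestKernel:
    """Guest kernel modified to be 'hypervisor aware'."""

    def __init__(self, guest_id, hv):
        self.guest_id = guest_id
        self.hv = hv

    def disable_interrupts(self):
        # An unmodified kernel would execute a sensitive instruction here;
        # the paravirtualized kernel asks the hypervisor instead.
        self.hv.hypercall(self.guest_id, "set_interrupts", False)


hv = Hypervisor()
kernel = ParavirtGuestKernel("guest-1", hv)
kernel.disable_interrupts()
print(hv.hypercall("guest-1", "get_interrupts"))  # False
```

Because the guest states its intent directly, the hypervisor never has to scan or rewrite guest code, which is the trade-off for requiring a modified kernel.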

Hardware Assisted

Hardware-assisted virtualization relies on the processor and other hardware components to assist the virtualization process. For x86 platforms, Intel and AMD released hardware virtualization support between 2005 and 2006: Intel's version is called Intel VT and AMD's is called AMD-V. Both technologies add special instructions to the processor that hypervisors can use to offload costly instruction emulation. At the time of writing, hardware virtualization is still early in its development and does not yet outperform software-only techniques. However, hardware virtualization is expected to grow in importance, and Intel and AMD are both committed to improving the ways hardware can assist virtualization.[2][6]

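| Technique | Advantages | Disadvantages |
|---|---|---|
| Full virtualization | Runs unmodified guest OSs | Binary translation adds processor overhead |
| Paravirtualization | Hypercalls avoid emulating non-virtualizable instructions | Requires a modified guest OS kernel |
| Hardware assisted | Hypervisor can offload costly instruction emulation to the processor | Early implementations do not yet outperform software-only techniques |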

Intel-VT

Intel's hardware virtualization technology, Intel-VT, comprises three separate components. The processor component is called VT-x on x86 processors such as Xeon and VT-i on Itanium processors. Intel also supports virtualization in its chipsets with VT-d, which handles I/O from the guest OSs and lets hypervisors offload I/O tasks to the chipset for better performance. The third component, VT-c, handles network traffic: with a VT-c-enabled network card, the card's own chipset can handle the network traffic between virtual machines.[7][8]

AMD-V

AMD packages its hardware virtualization under the product name AMD-V. AMD-V offers advantages similar to those of Intel-VT, providing additional virtualization instructions that hypervisors can use to eliminate costly instruction emulation. In addition to CPU instructions, AMD-V, like Intel-VT, improves memory management by letting the hardware perform address lookups instead of software, which improves performance. Lastly, AMD-V includes special I/O instructions that improve I/O throughput by offloading I/O work to the hardware.[6]
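On a Linux host, the processor features described above are reported in the `flags` line of `/proc/cpuinfo`: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The sketch below assumes a Linux system; the helper name `hw_virt_support` is hypothetical. The flag may be absent if the feature is disabled in firmware or not exposed to a virtual machine, so a missing flag is not proof that the hardware lacks the feature.

```python
def hw_virt_support(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V' or None from /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:   # Intel virtualization extensions
                return "Intel VT-x"
            if "svm" in flags:   # AMD secure virtual machine extensions
                return "AMD-V"
            return None          # first CPU's flags are representative
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(hw_virt_support(f.read()))
    except OSError:
        print("/proc/cpuinfo not available (not a Linux system?)")
```

The parser takes the text as an argument rather than reading the file itself, so it can be checked against sample input on any platform.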

x86 virtualization challenges

In an x86 operating system environment, the OS is designed to run directly on the hardware, and user applications interface with the hardware through the OS. x86 processors enforce this hierarchy with four privilege levels known as protection rings, numbered 0 to 3. The base OS expects to run in ring 0, where it has the highest privileges and can communicate directly with the hardware. User applications typically run in ring 3, where they are least privileged and confined. In a virtualized system, because the hypervisor sits below the base OS and controls the hardware, the hypervisor occupies ring 0 and pushes the guest OS into a less privileged ring. This poses a serious challenge for an OS that expects to run in ring 0: some of its instructions behave differently outside ring 0 without causing a trap that the hypervisor could intercept. VMware was the first to overcome this difficulty, using the binary translation technique described above.[9]
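A toy model makes the problem concrete. It assumes a greatly simplified CPU with only two instructions modeled: LGDT, which faults outside ring 0 and can therefore be trapped and emulated by a hypervisor, and POPF, which, when executed with a privilege level above the I/O privilege level, silently leaves the interrupt flag unchanged instead of faulting. The classes and the helper `run_deprivileged_kernel` are hypothetical; real x86 behavior has many more cases.

```python
class GeneralProtectionFault(Exception):
    """Raised when a privileged instruction runs outside ring 0."""


class ToyCPU:
    PRIVILEGED = {"lgdt", "hlt"}  # these fault (trap) if CPL != 0

    def __init__(self, cpl, iopl=0):
        self.cpl = cpl            # current privilege level (ring 0-3)
        self.iopl = iopl          # I/O privilege level
        self.interrupts_enabled = True

    def execute(self, insn, operand=None):
        if insn in self.PRIVILEGED:
            if self.cpl != 0:
                # Trap: control passes to the hypervisor, which can emulate it.
                raise GeneralProtectionFault(insn)
            return
        if insn == "popf":
            # operand: interrupt-flag value popped from the stack.
            # When CPL > IOPL the change is silently ignored (no fault),
            # so a hypervisor never learns the guest tried to change it.
            if self.cpl <= self.iopl:
                self.interrupts_enabled = operand


def run_deprivileged_kernel():
    cpu = ToyCPU(cpl=1)  # guest kernel pushed from ring 0 to ring 1
    trapped = []
    for insn, arg in [("lgdt", None), ("popf", False)]:
        try:
            cpu.execute(insn, arg)
        except GeneralProtectionFault as fault:
            trapped.append(str(fault))  # hypervisor would emulate here
    return trapped, cpu.interrupts_enabled


print(run_deprivileged_kernel())  # (['lgdt'], True)
```

Only LGDT reaches the hypervisor; the guest's attempt to disable interrupts is lost, which is why a trap-based approach alone is not enough on x86 and binary translation or hardware support is needed.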

Memory Virtualization

When creating a virtualized environment, memory management must be virtualized in addition to the CPU. In full virtualization, where the guest OSs are not "hypervisor aware", each OS expects its memory space to start at address 0x0000. Because the hypervisor sits between the OS and the hardware, however, the guest OS cannot have direct access to the memory it expects. To host multiple virtual machines within a single physical memory space, hypervisors use shadow page tables, which map each virtual machine's memory addresses directly to physical memory addresses. For this reason, a virtual machine cannot be allocated more memory than the physical memory available. While shadow page tables allow seamless translation between the virtual and physical memory spaces, maintaining them takes a toll on the processor and creates overhead. The second generation of hardware virtualization support addresses this by moving the second level of address lookup from software into hardware.[10]
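A shadow page table is the composition of two mappings: the guest's own page table (guest virtual page to guest physical page) and the hypervisor's record of where it placed each guest page (guest physical page to host physical page). The Python sketch below builds that composition with dictionaries. It is a simplified model that assumes single-level tables and 4 KiB pages; the function names and the example page numbers are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size


def build_shadow_page_table(guest_pt, pmap):
    """Compose guest virtual->guest physical with guest physical->host physical.

    guest_pt: guest page table, guest virtual page -> guest physical page
    pmap:     hypervisor's map, guest physical page -> host physical page
    Returns the shadow table, guest virtual page -> host physical page,
    which is what the real MMU would use.
    """
    shadow = {}
    for gva_page, gpa_page in guest_pt.items():
        if gpa_page in pmap:  # only map pages the hypervisor has backed
            shadow[gva_page] = pmap[gpa_page]
    return shadow


def translate(shadow, vaddr):
    """Translate a guest virtual address with a single shadow-table lookup."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    return shadow[page] * PAGE_SIZE + offset


# The guest believes its memory starts at physical page 0 ...
guest_pt = {0: 0, 1: 1, 2: 5}
# ... but the hypervisor actually placed those pages elsewhere in host memory.
pmap = {0: 100, 1: 101, 5: 240}

shadow = build_shadow_page_table(guest_pt, pmap)
print(shadow)                          # {0: 100, 1: 101, 2: 240}
print(hex(translate(shadow, 0x2010)))  # 0xf0010
```

Whenever the guest edits its page table, the hypervisor must notice (typically by write-protecting the guest's page-table pages) and update the affected shadow entries; that bookkeeping is the processor overhead described above.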

Hardware Assisted

With a hardware-assisted memory management unit, hypervisors spend less effort on memory management and can focus on other major tasks, such as handling communication between the guest OSs and the physical hardware, which improves performance. Intel calls this technique Extended Page Tables (EPT). With EPT, the processor itself translates the guest OS's physical addresses into host physical addresses, so the hypervisor no longer has to perform that translation in software. AMD's implementation of the same technique is called Nested Page Tables (NPT).
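Moving the lookup into hardware is not free. On a TLB miss, the processor must walk both the guest's page tables and the EPT/NPT tables, because every pointer in the guest's tables is itself a guest physical address. The sketch below counts the worst-case memory references for such a two-dimensional walk. It assumes no caching of intermediate translations (real processors cache aggressively, so the typical cost is much lower); the function name is hypothetical.

```python
def nested_walk_references(guest_levels, host_levels):
    """Worst-case memory references for one TLB miss under nested paging.

    Each guest page-table level is located by a guest *physical* pointer,
    so the hardware must translate that pointer through all host (EPT/NPT)
    levels before reading the guest entry. The final guest physical
    address of the data also needs a host walk.
    """
    refs = 0
    for _ in range(guest_levels):
        refs += host_levels  # host walk to locate this guest table page
        refs += 1            # read the guest page-table entry itself
    refs += host_levels      # host walk for the final guest physical address
    return refs


print(nested_walk_references(4, 4))  # 24 (4-level guest and host tables)
print(nested_walk_references(4, 0))  # 4  (native walk, no nesting)
```

The count equals (g + 1)(h + 1) - 1 for g guest levels and h host levels, which is why hardware page-walk caches matter so much for nested paging.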

Future

Continued development of virtualization by software and hardware companies will enable more efficient, better utilized and faster virtual servers. Commitments from hardware companies such as Intel and AMD, backed by software companies such as Microsoft, VMware, Citrix and Oracle, will further drive innovation and wider deployment of virtual servers.

Hosting Service Providers

One area of today's computing sector that relies heavily on virtualization is hosting service providers, which let end users create web applications and upload them to the providers' servers. A typical hosting service provider operates hundreds to thousands of servers in an environmentally controlled data center and sells portions of those servers to customers. In the pre-virtualization era, a single server was set up for a single customer, who could log in and upload files for the server to serve. This model gave way to setting up a separate web directory for each customer on a single server shared by multiple customers. This posed a problem, however, when customers needed to install their own custom applications or run a version of the web server software different from the one installed. Because of these challenges, the hosting sector quickly adopted virtual private servers. A virtual private server lets each customer run a full server of its own and install its own programs without worrying about other tenants or custom configurations. Most large hosting service providers offer virtual private servers as one of their packages and let end users install either Linux or Windows to run their products.

Cloud Computing

Cloud computing is a recent phenomenon, and a buzzword, that extends the virtual private server model by letting end users shrink or expand resources depending on the workload. In a typical virtual server environment, a single physical server is divided into multiple virtual servers; in a cloud computing environment, the reverse is also possible, combining the resources of multiple virtual servers into a single logical server. This is an emerging area, with Amazon and Google leading the effort to use their well-established data centers to offer customers the security and reliability of those facilities. Unlike the traditional web hosting model, in which one pays a monthly fee to the hosting company, the cloud computing model usually charges by the hour. Customers pay only for the computing capacity they use, much like electricity from the grid.
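The billing difference is simple arithmetic. The sketch below compares pay-per-use hourly billing with a fixed monthly fee for a workload that needs one server all month plus two extra servers for a short traffic peak. All prices and hours are hypothetical, and it assumes that under the flat-fee model the customer must rent enough servers for the peak for the whole month; the function and class names are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    hours: float        # hours the instance actually ran
    hourly_rate: float  # price per instance-hour (hypothetical)


def pay_per_use_cost(usages):
    """Total cost when every instance is billed only for the hours it ran."""
    return sum(u.hours * u.hourly_rate for u in usages)


def flat_monthly_cost(servers, monthly_fee):
    """Total cost when each server is rented for a fixed monthly fee."""
    return servers * monthly_fee


# Hypothetical month: one instance runs all month (720 h), and two extra
# instances are added for a 40-hour traffic peak.
usage = [Usage(720, 0.10), Usage(40, 0.10), Usage(40, 0.10)]
print(round(pay_per_use_cost(usage), 2))  # 80.0
print(flat_monthly_cost(3, 70.0))         # 210.0
```

The saving comes from not paying for peak capacity while it sits idle; with a steady workload and different prices, the flat fee could just as well be cheaper.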

Economics

One of the main driving forces behind the push for virtualization in industry is the potential cost savings of running multiple servers on one machine instead of needing a separate server for each operating system instance. Not only does one save on the cost of purchasing multiple physical servers, but maintenance costs also decrease, because fewer servers need physical maintenance, climate control and space. Fewer machines also mean lower energy costs.

Virtual Server Market Overview

There is a lot of potential for growth in the virtual server market. In a recent survey, two-thirds of the companies surveyed ran fewer than half of their applications on virtual servers, and over half had even begun to virtualize their desktops. VMware estimates that the number of enterprises beginning to adopt its x86 virtualization has increased by 25% each year.[11] These figures show not only that the market has room to grow but also that enterprises want to use virtual servers. Financial estimates put this market's value at over 19 billion dollars.[12]

Why Virtual?

What is the reason for all this growth in the virtual server industry? In a word: money. According to Business Insights, moving to virtual servers can cut costs in several areas:[12]

- Physical machine costs - An enterprise running 250 dual-core servers could save over three million dollars over a three-year period. Additionally, power savings of close to $125,000 per 1,000 PCs per year could be realized by moving to a server-hosted desktop virtualization solution.

- Power costs - Although the move to virtual servers often requires the purchase of new hardware, IBM research states that over the long term server space could be reduced by up to 90%. Fewer servers mean less money spent on power and cooling.

- IT department costs - Beyond the cost of purchasing and powering physical servers, the largest cost for an IT department is labor, which accounts for 70% of its costs. Although running virtual servers would demand a more specialized IT crew, it would also require a smaller staff, which is critical for companies looking to reduce labor costs.

- Line of Business Users - Because the virtual server farm is smaller and easier to maintain, services are more likely to remain available, and support can be provided more quickly, increasing the productivity of line of business (LOB) users.

Corporate Landscape

The current corporate market for creating virtual servers is volatile and involves a few main players. [11]

Major Players

- VMware - The current leader in the virtual server market. In 2007 VMware held over half of the x86 server virtualization market and served over 80% of large corporate enterprises. Its main products are:

- VMware Infrastructure - Used to create and manage virtual server environments

- VMware VirtualCenter - Used for monitoring

- VMware Lab Manager - Used to create test environments

- VMware Server - Server partitioner

- VMware Converter - Used to convert physical servers to virtual servers

- Microsoft - Microsoft first tried to enter the virtual server market in 2002 but was relatively unsuccessful because purchasers were uneasy about alternate OS support in Microsoft's offering. In 2006 Microsoft launched Virtual Server Manager, claiming that it could do everything VMware's virtual servers could. Though this was a bit of a reach, Microsoft did release a free virtual server that supported Linux-based OSs, which helped calm the uneasiness felt in 2002. Its main product is:

- Microsoft Windows Server Hyper-V - Not quite as robust as VMware, but it is a direct challenge.

- Citrix / XenSource - Citrix is the leader in the desktop virtualization market, with a 19% market share. In 2007 Citrix purchased XenSource, and it focuses on virtualization built entirely on open source code. Its main products are:

- XenExpress - Virtualization starter package

- XenServer - Virtualization platform for Windows and Linux servers

- XenEnterprise - Enterprise-wide virtual management tool

These three companies make up the vast majority of the virtual server market and have such a lead that the market landscape should not drastically change in the next several years.

Challenges to Growth

There are several challenges this industry faces if it is to continue its fast growth: [13]

- Security - According to one report, 60% of virtual servers are less secure than the physical machines they replaced. With advances in technology, that figure is expected to drop to 30%. However, for enterprises with especially sensitive data and processes to protect, that level of security may be a limiting factor in switching to virtual servers, despite the cost advantages.

- Licensing - This is a challenging situation in which software vendors still work under the physical server model even though the hardware has evolved to virtual servers. In the physical server model, an enterprise would purchase one license for each machine that needed a piece of software. In the virtual server world, however, each machine might run dozens of instances of the same software, requiring dozens of licenses. This immediately eats into the money saved by using fewer physical servers.

It appears, however, that software manufacturers are starting to change their pricing structures. Microsoft, for example, requires only one license for its products on a dual-core machine, and certain customers that have purchased specific versions of the Windows operating system will be able to run up to four copies of that OS on the desktop. However, for large-scale enterprises this remains a hefty cost that makes the cost/benefit analysis of moving to virtual servers difficult.

- Software - In addition to licensing concerns, not all software can be virtualized, for various technical reasons. Internet Explorer, for instance, is extremely difficult to virtualize. As enterprises use more and more virtual servers, balancing the applications that can run virtually against those that cannot becomes a challenge.

- IT Technicians - The change to virtual servers requires the IT staff who maintain them to be much more skilled and knowledgeable. Not only are they responsible for the same hardware as before, they must also have the skills to service the wider array of software that might be found on virtual machines. For example, one host server might run instances of both Windows and Linux. In the past, that would have required at least two servers, each potentially with its own technician. Because it is not feasible to hire a person for each different operating system, the enterprise must hire more highly skilled IT staff.

Although these are all difficult challenges to consider, the analysis of the market conditions suggests that these are not conditions that act as a barrier to entry.

References

- [1] VMware, Inc. http://www.vmware.com/ Retrieved 08-07-2010.

- [2] Rosenblum/Garfinkel. "Virtual Machine Monitors: Current Tech and Future Trends." May 2005. Accessed: http://www.stanford.edu/~stinson/paper_notes/virt_machs/vmms.txt.

- [3] "How are Hypervisor Classified." Accessed: http://www.xen.org/files/Marketing/HypervisorTypeComparison.pdf.

- [4] "Intel Privileged and Sensitive Instructions." February 1, 2002. Accessed: http://support.microsoft.com/kb/114473.

- [5] "What is Xen." Accessed: http://www.xen.org/files/Marketing/WhatisXen.pdf.

- [6] Lo, Jack. "VMware and Hardware Assist Technology (Intel VT and AMD-V)." 2006. Accessed: http://download3.vmware.com/vmworld/2006/tac9463.pdf.

- [7] Robin, John Scott. "Analysis of the Intel Pentium's Ability to Support a Secure Virtual Machine Monitor." 2000. Accessed: http://www.usenix.org/events/sec2000/full_papers/robin/robin_html/index.html.

- [8] "A Superior Hardware Platform for Server Virtualization." Accessed: http://download.intel.com/technology/virtualization/320426.pdf.

- [9] Cox, Russ. "PC Architecture from inside the CPU." 2002. Accessed: http://swtch.com/~rsc/talks/pcarch.pdf.

- [10] Waldspurger, Carl A. "Memory Resource Management in VMware ESX Server." Dec 2002. Accessed: http://waldspurger.org/carl/papers/esx-mem-osdi02.pdf.

- [11] Woodward, Kirk. "Virtual Server Market Trends." October 2009. Accessed: http://www.faulkner.com/products/faulknerlibrary/default.htm.

- [12] Furness, Victoria. "The Future of Virtualization. Emerging trends and the evolving vendor landscape." Business Insights, October 2009. Accessed: http://www.bi-interactive.com/index.aspx.

- [13] Greiner, Lynn. "Virtual Computing Overview." April 2010. Accessed: http://www.faulkner.com/products/faulknerlibrary/default.htm.