Randomized controlled trial/Citable Version: Difference between revisions

Pat Palmer (talk | contribs) m (Text replacement - "United States" to "United States of America") |

Pat Palmer (talk | contribs) (duplicate reference definitions removed) |


Latest revision as of 07:39, 31 October 2024

"A clinical trial is defined as a prospective scientific experiment that involves human subjects in whom treatment is initiated for the evaluation of a therapeutic intervention. In a randomized controlled clinical trial, each patient is assigned to receive a specific treatment intervention by a chance mechanism."[1] The theory behind these trials is that the value of a treatment will be shown in an objective way, and, though usually unstated, there is an assumption that the results of the trial will be applicable to the care of patients who have the condition that was treated.

Medical research answers many questions about health, illness, and treatment options. Evaluating new medicines and other treatments may involve research using randomized controlled trials (RCTs). In such trials, participants are assigned to different treatments at random (by chance, like the flip of a coin). This is necessary to ensure that differences in outcomes are due to the treatment under study and not to other factors that could otherwise influence treatment assignment. Participants who, by the randomization process, serve as controls receive a standard treatment or a placebo treatment (a pill or procedure that does not include active ingredients).[2]

Trials of potential treatments, for ethical reasons, tend to involve multiple stages, starting with small safety tests of the drug or other therapy. Once there is evidence of safety and preliminary clinical trials have been completed, the effort moves to a larger scale: large multicentre clinical trials that are randomized, controlled, and double-blind, with a control group of patients (i.e., the control "arm" of the trial) and an experimental arm.

Trials should be large, so that serious adverse events might be detected even when they occur rarely. Multi-centre trials minimize problems that can arise when a single geographical locus has a population that is not fully representative of the global population, and they can minimize the effect of geographical variations in environment and health care delivery. Randomization (if the study population is large enough) should mean that the study groups are unbiased. A double-blind trial is one in which neither the patient nor the deliverer of the treatment is aware of the nature of the treatment offered to any particular individual, and this avoids bias caused by the expectations of either the doctor or the patient.
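The link between trial size and the chance of seeing a rare adverse event can be illustrated with simple probability. This sketch assumes that each participant independently has the same event probability p, which is a simplification. Under that assumption, the chance of observing at least one event among n participants is 1 - (1 - p)^n. Rearranging gives the familiar "rule of three": roughly 3/p participants are needed for a 95% chance of seeing at least one event. The function names are illustrative.

```python
import math

def prob_at_least_one_event(p: float, n: int) -> float:
    """Probability that at least one of n independent participants has an event of probability p."""
    return 1.0 - (1.0 - p) ** n

def participants_needed(p: float, detection_prob: float = 0.95) -> int:
    """Smallest n giving at least `detection_prob` chance of observing one or more events."""
    return math.ceil(math.log(1.0 - detection_prob) / math.log(1.0 - p))

if __name__ == "__main__":
    # Hypothetical adverse event affecting 1 in 1,000 patients
    print(participants_needed(0.001))                      # close to the rule-of-three value 3 / 0.001
    print(round(prob_at_least_one_event(0.001, 500), 3))   # a 500-patient trial misses it more often than not
```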

Major developments in randomized controlled trials

International Conference on Harmonization

In 1990, a steering committee was formed for the International Conference on Harmonization.[3] This led to the recommendations for Good Clinical Practice in the conduct of trials.[4] Some have considered these guidelines burdensome.[5]

In the United States of America, Good Clinical Practice is overseen by the Food and Drug Administration (http://www.fda.gov/ScienceResearch/SpecialTopics/RunningClinicalTrials/).

CONSORT Statement

The quality of reporting of the conduct of randomized controlled trials remains problematic.[6]

In 1996, the CONSORT Statement, which improved how trials are reported, was published.[7] The statement was revised in 2001[8] and 2010.[9]

As of 2005, among high-impact journals, "CONSORT was mentioned in the instructions of 36 (22%) journals (see bmj.com), more often in general and internal medicine journals (8/15; 53%) than in specialty journals (28/152; 18%)".[10] Changes in 2010 include reporting of trial registration, availability of the original protocol, and funding. The quality of reporting of trials in psychiatry may have improved since the introduction of the CONSORT statement.[11]

Trial registration

In 2004, the International Committee of Medical Journal Editors (ICMJE) announced that all trials starting enrollment after July 1, 2005 must be registered prior to consideration for publication in one of the 12 member journals of the Committee.[12]

Disclosure of Competing Interests

In 2009, the International Committee of Medical Journal Editors (ICMJE) announced a Uniform Format for Disclosure of Competing Interests in ICMJE Journals.[13]

Control group

"Control", according to the current Declaration of Helsinki ethical guides, may or may not involve a placebo. If there is no accepted treatment, or the disease is mild and self-limiting, placebo controls may be ethical. If there is accepted treatment, the best accepted treatment becomes the control arm. Trials that are controlled, but not by a placebo, still generate arguments about information value versus ethics.

Placebo controls are important because the placebo effect can often be strong. The more value a subject believes an unknown drug has, the stronger its placebo effect.[14] The use of historical rather than concurrent controls may lead to exaggerated estimation of effect.[15]

The placebo effect can be seen in controlled trials of surgical interventions with the control group receiving a sham procedure.[16][17][18]

The Hawthorne effect refers to the improvement seen in control subjects simply from participating in research.[19]

Blinding

Blinding, where neither participants nor experimenters know what treatment the participants are receiving, is important, especially for trials that have subjective outcomes.[20] Trials that are not blinded tend to report more favorable results.[21]

Variations in design

Cluster-randomized trials

In some settings, health care providers or healthcare institutions, rather than the individual research subjects, should be randomized.[22] This is appropriate when the intervention targets the providers or institutions, so that the results from individual subjects are not truly independent but cluster within each provider or institution. Guidelines exist for conducting cluster-randomized trials.[23] Cluster-randomized trials are not always correctly designed and executed.[24]

Designing an adequately sized cluster-randomized trial depends on several factors. One is the intraclass (intracluster) correlation coefficient (ICC),[25][26] which measures how similar the outcomes of subjects within the same cluster are. A higher ICC means that more of the total variation lies between clusters rather than within them. The ICC therefore plays a role analogous to the variance between subjects in an individually randomized trial. High variance between subjects means a larger study is needed, and in the same way a higher ICC (clusters that differ more from one another) means more clusters are needed.
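A common way to turn the ICC into a sample size is the design effect, 1 + (m - 1) x ICC, where m is the average cluster size. The sample size from an individually randomized calculation is multiplied by this factor. The sketch below makes two assumptions: equal cluster sizes, and a per-arm individual sample size that has already been computed. The function names and numbers are illustrative.

```python
import math

def design_effect(cluster_size: int, icc: float) -> float:
    """Variance inflation from randomizing clusters of equal size instead of individuals."""
    return 1.0 + (cluster_size - 1) * icc

def clusters_per_arm(n_individual_per_arm: int, cluster_size: int, icc: float) -> int:
    """Clusters needed per arm to match the precision of an individually randomized trial."""
    inflated_n = n_individual_per_arm * design_effect(cluster_size, icc)
    return math.ceil(inflated_n / cluster_size)

if __name__ == "__main__":
    # Hypothetical: 300 patients per arm would suffice if patients were randomized individually,
    # and each cluster (e.g. a practice) contributes 20 patients.
    for icc in (0.0, 0.01, 0.05, 0.10):
        print(icc, clusters_per_arm(300, cluster_size=20, icc=icc))
```

With no within-cluster correlation, 15 clusters of 20 patients reproduce the 300 patients. Each increase in the ICC raises the number of clusters required.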

Before-after studies

Uncontrolled before-after studies and controlled before-after studies probably should not be considered variations of a randomized controlled trial, yet if carefully done they offer advantages over other observational studies.[27] As in a true cluster-randomized trial, the intervention group can be randomly assigned; however, unlike a cluster-randomized trial, a before-after study does not have enough clusters or groups. An interrupted time series analysis can strengthen the plausibility of a causal inference; however, interrupted time series analyses are commonly performed incorrectly.[28]

Crossover trial

In crossover trials, patients initially receive either the intervention or the control, and later all patients switch groups.[29][30]

Delayed start trial

In this design, one group is randomized to start treatment earlier than the control group, which begins the same treatment after a delay.[31] This design allows analysis for disease-modifying effects of treatments. An example of this design was a trial of rasagiline for Parkinson disease.[32]

Dose ranging studies

Various study designs exist to determine the optimal dose of a new drug.

- Crossover designs have been used.[33]

- Designs that test doses in sequence, such as up-and-down studies[34] and adaptive dose-ranging trials,[35] depend on an outcome that appears quickly after the medication is given, so that the dose can be titrated to achieve the effect.[36][37] An example of such an outcome is blood pressure.

- Parallel designs such as multi-level factorial studies have been used.[38] Special sample size calculations exist for these designs.[38]
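The sequential logic of an up-and-down study can be shown with a short simulation. The sketch assumes the classic rule: the next patient receives the next lower dose after a response and the next higher dose after no response. Under that rule, doses tend to cluster around the dose that produces a response in about half of patients. The dose levels, the logistic dose-response curve and its parameters are all hypothetical. The simple average used as an estimate is also cruder than the formal estimators used in practice.

```python
import math
import random

DOSES = [5, 10, 15, 20, 25, 30, 35]  # hypothetical dose levels (mg)

def hypothetical_response(dose, rng, ed50=20.0, slope=0.3):
    """Hypothetical logistic dose-response curve; returns True if the patient responds."""
    p_response = 1.0 / (1.0 + math.exp(-slope * (dose - ed50)))
    return rng.random() < p_response

def simulate_up_and_down(doses, start_index, respond, n_patients, rng):
    """Step down one dose level after a response, up one level after no response."""
    index = start_index
    history = []
    for _ in range(n_patients):
        dose = doses[index]
        responded = respond(dose, rng)
        history.append((dose, responded))
        if responded:
            index = max(index - 1, 0)               # stay at the lowest level if already there
        else:
            index = min(index + 1, len(doses) - 1)  # stay at the highest level if already there
    return history

if __name__ == "__main__":
    history = simulate_up_and_down(DOSES, 0, hypothetical_response, 40, random.Random(1))
    later_doses = [dose for dose, _ in history[10:]]  # ignore the initial climb from the starting dose
    print("crude 50% response dose estimate:", sum(later_doses) / len(later_doses))
```

The design works only because each patient's response is known before the next patient is dosed, which is why a quickly measured outcome is required.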

Factorial design

| Propranolol | Chlordiazepoxide given | Chlordiazepoxide not given |
|---|---|---|
| Given | 1 | 4 |
| Not given | 0 | 4 |

Notes: 1. There were 15 patients in each group.

A factorial design allows two interventions to be studied with the ability to measure the treatment effect of each intervention in isolation and in combination.[40][41] Interaction between the two treatments can be displayed with "interaction ratios or odds ratios accompanied by their confidence intervals"[40] and can be measured with the method of Gail and Simon[42] or the Breslow-Day test for homogeneity.
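An interaction ratio can be computed from the counts in the factorial example. Those counts are 1 and 4 with propranolol (with and without chlordiazepoxide), 0 and 4 without propranolol, and 15 patients per group. The sketch assumes that the counts are numbers of patients with the (unspecified) outcome. It adds 0.5 to every cell (the Haldane-Anscombe correction) because one cell is zero. The ratio of odds ratios gets an approximate Woolf-type confidence interval. This is one illustrative approach, not the Gail and Simon method or the Breslow-Day test named above, and the names are illustrative.

```python
import math

N_PER_GROUP = 15
# Counts keyed by (propranolol given, chlordiazepoxide given).
# Treating them as numbers of patients with the outcome is an assumption.
EVENTS = {(True, True): 1, (True, False): 4, (False, True): 0, (False, False): 4}

def corrected_cells(events, n, correction=0.5):
    """Events and non-events with 0.5 added to each, so zero counts do not give infinite odds."""
    return events + correction, n - events + correction

def odds(events, n):
    with_event, without_event = corrected_cells(events, n)
    return with_event / without_event

def interaction_ratio(events_by_group, n, z=1.96):
    """Propranolol odds ratio with vs. without chlordiazepoxide, their ratio, and an approximate 95% CI."""
    or_with = odds(events_by_group[(True, True)], n) / odds(events_by_group[(False, True)], n)
    or_without = odds(events_by_group[(True, False)], n) / odds(events_by_group[(False, False)], n)
    ratio = or_with / or_without
    # The log ratio combines all eight corrected cells, so its variance is the sum of their reciprocals.
    se = math.sqrt(sum(1.0 / cell
                       for count in events_by_group.values()
                       for cell in corrected_cells(count, n)))
    log_ratio = math.log(ratio)
    ci = (math.exp(log_ratio - z * se), math.exp(log_ratio + z * se))
    return or_with, or_without, ratio, ci

if __name__ == "__main__":
    print(interaction_ratio(EVENTS, N_PER_GROUP))
```

With only 15 patients per group the confidence interval is very wide, which illustrates why interaction estimates need their intervals reported.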

Many examples of factorial trials exist.[43] [44]

N of 1 trial

In an "N of 1" trial, also called a "single-subject randomized" trial, a single patient randomly proceeds through multiple blinded crossover comparisons. This addresses the concern that traditional randomized controlled trials may not generalize to a specific patient.[45]

Underscoring the difficulty in extrapolating from large trials to individual patients, Sackett proposed the use of N of 1 randomized controlled trials. In these, the patient is both the treatment group and the placebo group, but at different times. Blinding must be done with the collaboration of the pharmacist, and treatment effects must appear and disappear quickly following introduction and cessation of the therapy. This type of trial can be performed for many chronic, stable conditions.[46] The individualized nature of the single-subject randomized trial, and the fact that it often requires the active participation of the patient (questionnaires, diaries), appeals to the patient and promotes better insight and self-management[47][48] as well as patient safety,[45] in a cost-effective manner.
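A blinded N of 1 schedule is often organized as pairs of treatment periods, with the order of active drug and placebo randomized within each pair. The sketch below assumes that pairing scheme and uses illustrative names. The pharmacist would hold the resulting key, so that the patient and clinician see only period numbers.

```python
import random

def n_of_1_schedule(n_pairs, rng):
    """Return treatment periods in pairs; each pair holds one active and one placebo period in random order."""
    schedule = []
    for _ in range(n_pairs):
        pair = ["active", "placebo"]
        rng.shuffle(pair)  # randomize the order within this pair
        schedule.extend(pair)
    return schedule

if __name__ == "__main__":
    # Hypothetical three-pair trial; the seed only makes the example reproducible.
    key = n_of_1_schedule(3, random.Random(2024))
    for period, treatment in enumerate(key, start=1):
        print(f"period {period}: {treatment}")  # kept by the pharmacist, not shown to the patient
```

Pairing guarantees that active drug and placebo are compared the same number of times, while randomization within pairs prevents a predictable alternating pattern.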

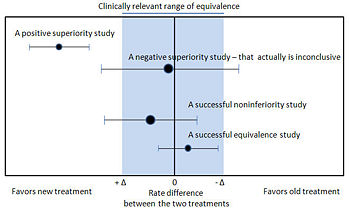

Noninferiority and equivalence randomized trials

As stated in the Declaration of Helsinki of the World Medical Association, it is unethical to give any patient a placebo treatment if an existing treatment option is known to be beneficial.[49][50] Many scientists and ethicists consider that the U.S. Food and Drug Administration, by demanding placebo-controlled trials, encourages the systematic violation of the Declaration of Helsinki.[51] In addition, the use of placebo controls remains a convenient way to avoid direct comparisons with a competing drug.

Guidance on the appropriate use of placebo is being revised.[52][53] When guidelines suggest that a placebo is an unethical control, an "active-control noninferiority trial" may be used.[54] To establish noninferiority, the following three conditions should be (but frequently are not) established:[54]

- "The treatment under consideration exhibits therapeutic noninferiority to the active control."

- "The treatment would exhibit therapeutic efficacy in a placebo-controlled trial if such a trial were to be performed."

- "The treatment offers ancillary advantages in safety, tolerability, cost, or convenience."

Noninferiority and equivalence randomized trials are difficult to execute well.[54] Guidelines exist for noninferiority and equivalence randomized trials.[55] In planning the trial, the investigators should choose a noninferiority or equivalence criterion and specify the margin of equivalence, with the rationale for its choice.[55] In reporting the outcomes, the authors should present confidence intervals so that the reader can see whether the result was within their noninferiority or equivalence criterion.[55]
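The comparison of a confidence interval with a prespecified margin can be sketched for a binary outcome where higher success rates are better. The sketch makes three assumptions:

- a simple Wald interval for the difference in proportions (other interval methods exist);
- a two-sided 95% level;
- hypothetical counts and margins.

Noninferiority is concluded if the whole interval lies above minus the margin. The function name is illustrative.

```python
from statistics import NormalDist

def noninferiority_check(success_new, n_new, success_control, n_control, margin, confidence=0.95):
    """Wald CI for the difference in success proportions (new minus control).
    Noninferiority is concluded if the lower limit lies above -margin."""
    p_new = success_new / n_new
    p_control = success_control / n_control
    diff = p_new - p_control
    se = (p_new * (1 - p_new) / n_new + p_control * (1 - p_control) / n_control) ** 0.5
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    lower, upper = diff - z * se, diff + z * se
    return diff, (lower, upper), lower > -margin

if __name__ == "__main__":
    # Hypothetical trial: 180/200 successes with the new drug, 185/200 with the active control
    print(noninferiority_check(180, 200, 185, 200, margin=0.10))  # interval stays within a 10-point margin
    print(noninferiority_check(180, 200, 185, 200, margin=0.05))  # same data, stricter margin: not shown
```

The same data support noninferiority under one margin but not another. This is why the margin and its rationale must be fixed in advance.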

Add-on design

"Sometimes a new agent can be assessed by using an 'add-on' study design in which all patients are given standard therapy and are randomly assigned to also receive either new agent or placebo."[52]

Pragmatic trials

CONSORT has described pragmatic trials and standards for their reporting.[56] CONSORT describes a pragmatic trial as addressing "does the intervention work when used in normal practice" as opposed to an "explanatory trial" that addresses "can the intervention work." CONSORT states that this is not a dichotomy of trial designs, but that pragmatic trials reflect an attitude in the design. CONSORT offers an example of a pragmatic trial[57] and an explanatory trial.[58]

The cohort multiple randomized controlled trial may be a practical variation of this design.[59]

Ethical issues

Background

The Belmont Report was released in 1979 by the National Commission for the Protection of Human Subjects in Biomedical and Behavioral Research.[60][61] The three Belmont principles are beneficence, justice and respect for persons.

The Declaration of Helsinki requires informed consent for participation in a trial. In the United States, there is an approval procedure for clinical trials in human subjects, whether for research only or for potential approval of a commercial drug. Most industrialized countries have such procedures; some permit reciprocal approvals.

In the United States, the Code of Federal Regulations (45 CFR 46.102(d) or http://www.access.gpo.gov/nara/cfr/waisidx_02/45cfr46_02.html) regulates human subjects research. Definitions from the Code include:

- "Research means a systematic investigation, including research development, testing and evaluation, designed to develop or contribute to generalizable knowledge. Activities which meet this definition constitute research for purposes of this policy, whether or not they are conducted or supported under a program which is considered research for other purposes. For example, some demonstration and service programs may include research activities."

- Human subject means a living individual about whom an investigator (whether professional or student) conducting research obtains:

  - Data through intervention or interaction with the individual, or

  - Identifiable private information.

In the United States, the Code of Federal Regulations defines:

- Research that may be exempt from IRB approval (45 CFR 46.101(b))

- Research that may be eligible for expedited IRB approval (45 CFR 46.110)

- The informed consent process (45 CFR 46.116)

Ethics of randomizing subjects

Determining the justification for experimentation can be difficult.[62]

Guidance on the appropriate use of placebo is being revised.[52][53][63] One tension is the balance between using placebo to increase scientific rigor and unnecessarily depriving patients of active treatment. This is the conundrum faced by Martin Arrowsmith in the book of the same name.[64][65]

Comparing a new intervention to a placebo control may not be ethical when an accepted, effective treatment exists. In this case, the new intervention should be compared to the active control to establish whether the standard of care should change.[66] The observation that industry-sponsored trials may be more likely to have positive results suggests that industry may not be picking the most appropriate comparison group.[67] However, it is possible that industry is better at predicting which new innovations are likely to be successful and discontinues research on less promising interventions before the trial stage.

There are times when placebo control is appropriate even when there is accepted, effective treatment.[52][53][63]

There are ethical concerns in comparing a surgical intervention to sham surgery; however, this has been done.[68][18] Guidelines by the American Medical Association address the use of placebo surgery.[69]

Interim analysis - stopping trials early

Trials are increasingly stopped early[70]; however, this may induce a bias that exaggerates results[71][70]. Data safety and monitoring boards that are independent of the trial are commissioned to conduct interim analyses and make decisions about stopping trials early.[72] [73]

Reasons to stop a trial early are efficacy, safety, and futility.[74][75]

Regarding efficacy, various rules exist that adjust alpha to decide when to stop a trial early.[76][77][78][79][80] Commonly recommended rules are the O'Brien-Fleming rule, which requires a p-value threshold that varies with the number of interim analyses, and the Haybittle-Peto rule, which requires p<0.001 to stop a trial early.[76][77][81]
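The two rules can be contrasted in a few lines. The Haybittle-Peto check below follows the text, stopping only if p < 0.001 at an interim analysis. Its final-analysis threshold of 0.05 is an assumption, reflecting that this rule leaves the final test at or near the nominal level.

The original O'Brien-Fleming boundaries require numerical integration to compute. The sketch therefore substitutes the closely related Lan-DeMets O'Brien-Fleming-type alpha-spending function, alpha(t) = 2 - 2 * Phi(z / sqrt(t)). Here Phi is the standard normal distribution function, z is the two-sided critical value for the overall alpha, and t is the fraction of the planned information accrued. The function shows how little alpha such a rule spends early in a trial. The names are illustrative.

```python
from statistics import NormalDist

_STANDARD_NORMAL = NormalDist()

def haybittle_peto_stop(p_value, is_final_analysis, final_alpha=0.05):
    """Stop for efficacy at an interim look only if p < 0.001; use final_alpha (an assumption) at the end."""
    threshold = final_alpha if is_final_analysis else 0.001
    return p_value < threshold

def obf_type_alpha_spent(information_fraction, alpha=0.05):
    """Cumulative two-sided alpha spent by the Lan-DeMets O'Brien-Fleming-type spending function."""
    if not 0 < information_fraction <= 1:
        raise ValueError("information_fraction must be in (0, 1]")
    z = _STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    return 2 * (1 - _STANDARD_NORMAL.cdf(z / information_fraction ** 0.5))

if __name__ == "__main__":
    for t in (0.25, 0.5, 0.75, 1.0):
        print(f"t={t:.2f}: cumulative alpha spent {obf_type_alpha_spent(t):.5f}")
    print(haybittle_peto_stop(0.004, is_final_analysis=False))  # an interim p of 0.004 does not stop the trial
```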

Using a more conservative stopping rule reduces the chance of a statistical alpha (Type I) error; however, these rules do not prevent the effect size from being exaggerated.[82][77] According to Bassler, "the more stringent the P-value threshold results must cross to justify stopping the trial, the more likely it is that a trial stopped early will overestimate the treatment effect."[75] A review of trials stopped early found that the earlier a trial was stopped, the larger its reported treatment effect,[70] especially if the trial had fewer than 500 total events.[83] Accordingly, examples exist of trials whose interim analyses were significant, but the trial was continued and the final analysis was less significant or was not significant.[84][85][86]

Methods to correct for exaggeration exist.[87][82] A Bayesian approach to interim analysis may help reduce bias and adjust the estimate of effect.[88]
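A small simulation shows why a trial stopped early tends to overestimate the effect. Each hypothetical trial has one interim look halfway through. It stops if the interim z-statistic exceeds 3.29 (about p < 0.001, two-sided) and otherwise runs to completion. The true effect, the standard error and the threshold are illustrative assumptions. Only unusually favorable interim estimates cross such a stringent threshold, so every stopped trial reports an effect well above the true value.

```python
import random
from statistics import fmean

def simulate_two_stage_trials(true_effect, se_half, z_stop, n_trials, seed=0):
    """Return reported effect estimates for trials stopped at the interim look and for completed trials."""
    rng = random.Random(seed)
    stopped, completed = [], []
    for _ in range(n_trials):
        # Estimates from the two halves of a trial are independent, each with standard error se_half.
        first_half = rng.gauss(true_effect, se_half)
        if first_half / se_half > z_stop:
            stopped.append(first_half)  # stopped for benefit: reported estimate uses interim data only
        else:
            second_half = rng.gauss(true_effect, se_half)
            completed.append((first_half + second_half) / 2)
    return stopped, completed

if __name__ == "__main__":
    true_effect = 0.2  # hypothetical true treatment effect
    stopped, completed = simulate_two_stage_trials(true_effect, se_half=0.14, z_stop=3.29, n_trials=20000)
    print(f"{len(stopped)} trials stopped early; mean reported effect {fmean(stopped):.2f}")
    print(f"{len(completed)} trials completed; mean reported effect {fmean(completed):.2f}")
```

Raising `z_stop` makes stopping rarer, but it also raises the minimum effect a stopped trial can report. This matches Bassler's point that stricter thresholds do not remove the overestimation.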

As an alternative to the alpha rules, conditional power can help decide when to stop trials early.[89][90]

Unnecessary trials

Some trials may be unnecessary because their hypotheses have already been established.[91] Using cumulative meta-analysis, 25 of 33 randomized controlled trials of streptokinase for the treatment of acute myocardial infarction were unnecessary.[92]

Authors of trials may fail to cite prior trials.[93]

Cumulative meta-analysis prior to a new trial may indicate trials that do not need to be executed.[94]
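Cumulative meta-analysis re-pools the evidence each time a new trial is added in chronological order, showing when the pooled estimate became conclusive. The sketch assumes a fixed-effect, inverse-variance model on the log odds ratio scale. The input estimates are hypothetical, not the streptokinase data, and the function name is illustrative.

```python
import math

def cumulative_meta_analysis(trials, z=1.96):
    """trials: list of (log_odds_ratio, standard_error) in chronological order.
    Returns (pooled OR, lower 95% limit, upper 95% limit) after each trial is added."""
    results = []
    weight_sum = 0.0
    weighted_sum = 0.0
    for log_or, se in trials:
        weight = 1.0 / se ** 2          # inverse-variance weight
        weight_sum += weight
        weighted_sum += weight * log_or
        pooled = weighted_sum / weight_sum
        pooled_se = math.sqrt(1.0 / weight_sum)
        results.append((math.exp(pooled),
                        math.exp(pooled - z * pooled_se),
                        math.exp(pooled + z * pooled_se)))
    return results

if __name__ == "__main__":
    hypothetical_trials = [(-0.30, 0.40), (-0.10, 0.35), (-0.25, 0.20), (-0.20, 0.15), (-0.22, 0.10)]
    for k, (or_, lower, upper) in enumerate(cumulative_meta_analysis(hypothetical_trials), start=1):
        status = "conclusive" if upper < 1 else "not yet conclusive"
        print(f"after trial {k}: OR {or_:.2f} ({lower:.2f} to {upper:.2f}), {status}")
```

Any trial planned after the pooled interval has excluded no effect would add little, which is the sense in which such trials may be unnecessary.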

Seeding trials

Seeding trials are studies sponsored by industry whose "apparent purpose is to test a hypothesis. The true purpose is to get physicians in the habit of prescribing a new drug."[95] Examples include the ADVANTAGE[95] and STEPS[96] trials.

Measuring outcomes

Subjectively assessed outcomes are more susceptible to bias in trials with inadequate allocation concealment.[97]

Missing data

Several approaches to handling missing data have been reviewed.[98] Regarding assigning an outcome to the patient, using a 'last observation carried forward' (LOCF) analysis may introduce biases.[99]
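LOCF replaces each missing follow-up value with the patient's most recent observed value. The sketch below uses illustrative names and marks a missed visit with None. It shows the mechanics and one source of bias: a patient who is worsening and then drops out is recorded as if the last measurement had persisted.

```python
from typing import List, Optional

def last_observation_carried_forward(visits: List[Optional[float]]) -> List[Optional[float]]:
    """Fill each missing visit (None) with the most recent observed value; leading gaps stay missing."""
    filled = []
    last_seen = None
    for value in visits:
        if value is not None:
            last_seen = value
        filled.append(last_seen)
    return filled

if __name__ == "__main__":
    # Hypothetical symptom scores (higher is worse) for a patient who drops out after visit 3
    print(last_observation_carried_forward([10.0, 12.0, 14.0, None, None]))  # [10.0, 12.0, 14.0, 14.0, 14.0]
```

Because the imputed value freezes the worsening trend, the direction and size of the bias depend on how outcomes would have changed after dropout.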

Crossover between experimental groups

Regarding group assignment, a 'per protocol' analysis may introduce bias compared to an 'intention to treat' analysis. Intention to treat is not always adequately described.[100][101] This bias may be due to the observation that research subjects who adhere to therapy, whether the therapy be an active treatment or placebo, have a reduction in mortality.[102][103] Thus excluding non-compliant subjects would appear to exaggerate the effects of treatment; however, the bias can go in either direction.[104] Analysis options exist.[105]

Surrogate outcomes

The cost and effort required to measure primary endpoints such as morbidity and mortality make surrogate outcomes an option. For example, in the treatment of osteoporosis the primary outcomes are fractures and mortality, whereas the surrogate outcome is change in bone mineral density.[106][107] Other examples of surrogate outcomes are tumor shrinkage and changes in cholesterol level, blood pressure, HbA1c, or CD4 cell count.[108] Surrogate markers may be acceptable when they meet this standard: "the surrogate must be a correlate of the true clinical outcome and fully capture the net effect of treatment on the clinical outcome".[108] However, surrogate outcomes and their rationale may not be adequately described in trials.[109]

Composite outcomes

Composite outcomes are problematic if the components of the composite vary in their magnitude and contribution to the composite.[110][111] [112] [113]

Overdiagnosis

In trials of mass screening, overdiagnosis is the diagnosis of disease that would never have caused harm.[114] Overdiagnosis inflates the apparent importance of the condition being screened for.

Adverse outcomes

Adverse effects may be discovered only after a trial is concluded, or only when a drug approved for general release on the basis of trial data is used in larger populations. Postmarketing surveillance is intended to detect hazards that the trials are not powerful enough to find.

Analysis

Dichotomous outcomes may be summarized with relative risk reduction, absolute risk reduction, or the number needed to treat.
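The three summaries can be computed from the event counts in each arm. The sketch below uses hypothetical counts and an illustrative function name. It uses exact fractions so that the number needed to treat is not distorted by floating-point rounding before being rounded up, which is the usual convention.

```python
import math
from fractions import Fraction

def dichotomous_summary(control_events, control_total, treated_events, treated_total):
    """Absolute risk reduction, relative risk reduction and number needed to treat."""
    control_risk = Fraction(control_events, control_total)
    treated_risk = Fraction(treated_events, treated_total)
    arr = control_risk - treated_risk                                   # absolute risk reduction
    rrr = float(arr / control_risk) if control_risk > 0 else None      # relative risk reduction
    nnt = math.ceil(1 / arr) if arr > 0 else None                       # rounded up by convention
    return float(arr), rrr, nnt

if __name__ == "__main__":
    # Hypothetical trial: 40/200 events with control, 30/200 with treatment
    print(dichotomous_summary(40, 200, 30, 200))  # (0.05, 0.25, 20)
```

The same 25% relative risk reduction would correspond to a much larger number needed to treat if the control event rate were lower. This is why the absolute measures are reported alongside the relative one.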

Planning analyses prior to conducting the trial

When trial protocols are publicly accessible, comparisons with the published reports show that discrepancies between planned and actual analyses may occur.[115][116]

Subgroup analyses

Subgroup analyses can be misleading due to failure to prespecify hypotheses and to account for multiple comparisons.[117][77][118]

When multiple subgroups are present, examining subgroups with a multivariate risk score may be better than examining subgroups one-variable-at-at-time which may exaggerate the role of subgroups.[119]

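Statistical methods[120] and criteria[121] exist for assessing the importance of subgroup findings. In brief, the key step is to test the statistical significance of the interaction (i.e., statistical heterogeneity) between subgroup membership and a relative measure of outcome; that is, to test whether the relative treatment effect varies across subgroups, rather than whether the effect is significant within each subgroup separately.[121]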
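A minimal sketch of such an interaction test, following the approach described by Altman and Bland:[120] the two subgroup relative risks are compared on the log scale, the standard error of their difference is the square root of the sum of the two squared standard errors, and each standard error is recovered from a reported 95% confidence interval. The relative risks, intervals and function names below are hypothetical.

```python
import math


def se_from_ci(lower: float, upper: float, z: float = 1.96) -> float:
    """Standard error of log(RR) recovered from a 95% confidence interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


def interaction_test(rr1: float, ci1: tuple, rr2: float, ci2: tuple):
    """Compare two subgroup relative risks; returns (ratio of RRs, z, two-sided P)."""
    diff = math.log(rr1) - math.log(rr2)                 # difference of log RRs
    se = math.hypot(se_from_ci(*ci1), se_from_ci(*ci2))  # sqrt(se1**2 + se2**2)
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))                 # two-sided normal P value
    return math.exp(diff), z, p


ratio, z, p = interaction_test(0.70, (0.55, 0.89), 0.95, (0.70, 1.29))
print(f"ratio of RRs = {ratio:.2f}, z = {z:.2f}, interaction P = {p:.2f}")
```

For these invented numbers the ratio of relative risks is about 0.74 with an interaction P value of about 0.12, even though the first subgroup's own confidence interval excludes 1. Judging each subgroup separately would therefore overstate the evidence for a subgroup effect.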

The following criteria have been proposed for interpreting the P value from the test of statistical significance:[121]

- P > 0.1 indicates skepticism about a subgroup effect

- P between 0.1 and 0.01 suggests that one should "consider the hypothesis"

- P less than or equal to 0.001 suggests that one should "take the hypothesis seriously"

Assessing the quality of a trial

Numerous scales and checklists for assessing trial quality have been proposed.[122] A comparison of the Cochrane Collaboration tool, the Jadad score, and the Schulz approach found that the Cochrane tool took more time to complete, contained some subjective items, and correlated poorly with the Jadad score and the Schulz approach.[123]

Cochrane bias scale

The Cochrane Collaboration uses a six-item tool.[124] Trials judged to be at high or uncertain risk of bias are more likely to report large effect sizes.[123]

When the Cochrane tool is used, a trial may be considered at high or uncertain risk of bias if any of the first three items (randomization, allocation concealment before and during enrollment, and blinding) is judged inadequate.[125]
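The decision rule described above can be written as a short function. The six domain names are assumed to follow the risk-of-bias tool in the Cochrane Handbook, the "low"/"high"/"unclear" coding of each judgment is a simplifying assumption, and the function name is hypothetical; as in the rule above, only the first three domains drive the overall classification.

```python
# Hypothetical encoding of the six-item Cochrane risk-of-bias tool.
DOMAINS = (
    "sequence generation",          # randomization
    "allocation concealment",
    "blinding",
    "incomplete outcome data",
    "selective outcome reporting",
    "other sources of bias",
)
KEY_DOMAINS = DOMAINS[:3]  # the first three items drive the overall judgment


def overall_risk_of_bias(judgments: dict) -> str:
    """Classify a trial from per-domain judgments coded 'low', 'high' or 'unclear'."""
    missing = set(DOMAINS) - set(judgments)
    if missing:
        raise ValueError(f"missing judgments for: {sorted(missing)}")
    if any(judgments[domain] != "low" for domain in KEY_DOMAINS):
        return "high or uncertain risk of bias"
    return "low risk of bias"


trial = dict.fromkeys(DOMAINS, "low")
trial["blinding"] = "unclear"
print(overall_risk_of_bias(trial))
```

A trial that is adequate on every item except an unclear blinding judgment is classified as being at high or uncertain risk of bias.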

Jadad score

The Jadad score may be used to assess quality.[126] The scale focuses on three attributes: randomization, blinding, and dropouts.
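For illustration, the usual five-point Jadad scoring can be sketched as follows: one point each for describing the study as randomized, for describing it as double-blind, and for describing withdrawals and dropouts, plus one point when the randomization or blinding method is described and appropriate, or minus one when it is described and inappropriate. The parameter names are hypothetical, and the original paper[126] remains the authoritative definition.

```python
from typing import Optional

# Adjustment applied when a method (randomization or blinding) is described.
_METHOD_ADJUSTMENT = {"appropriate": 1, "inappropriate": -1}


def jadad_score(randomized: bool, randomization_method: Optional[str],
                double_blind: bool, blinding_method: Optional[str],
                withdrawals_described: bool) -> int:
    """Return a Jadad score from 0 (poor) to 5 (rigorous).

    Method arguments are "appropriate", "inappropriate", or None when the
    report does not describe the method.
    """
    score = 0
    if randomized:
        score += 1 + _METHOD_ADJUSTMENT.get(randomization_method, 0)
    if double_blind:
        score += 1 + _METHOD_ADJUSTMENT.get(blinding_method, 0)
    if withdrawals_described:
        score += 1
    return score


print(jadad_score(True, "appropriate", True, None, True))
```

A report that describes an appropriate randomization method, states that the trial was double-blind without describing how, and accounts for dropouts scores 4.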

Schulz

The Schulz approach assesses the quality of allocation concealment.[127]

Studies of characteristics of trials

Larger studies are not necessarily of higher quality.[128]

Publication bias

Publication bias is the tendency for trials that show a statistically significant positive effect to be published more often than trials that show no effect or are inconclusive.
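A small simulation illustrates the consequence. It assumes, hypothetically, a treatment with no true effect, trials of varying size, and that only trials with a positive, statistically significant result (z > 1.96) are published; the published literature then suggests a benefit that does not exist.

```python
import random
import statistics

rng = random.Random(2024)   # fixed seed so the simulation is reproducible
TRUE_EFFECT = 0.0           # hypothetical: the treatment does nothing

all_estimates, published = [], []
for _ in range(5_000):
    n_per_arm = rng.randint(20, 200)        # trial size varies from trial to trial
    se = (2 / n_per_arm) ** 0.5             # SE of a mean difference, outcome SD = 1
    estimate = rng.gauss(TRUE_EFFECT, se)   # observed effect in this trial
    all_estimates.append(estimate)
    if estimate / se > 1.96:                # positive and "significant" -> published
        published.append(estimate)

print(f"mean of all trials:       {statistics.mean(all_estimates):+.3f}")
print(f"mean of published trials: {statistics.mean(published):+.3f} ({len(published)} trials)")
```

Every published estimate is positive, so their average overstates the true (zero) effect, while the average over all trials stays close to zero. Published small trials, whose standard errors are largest, report the largest spurious effects.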

Trial registration

In September 2004, the International Committee of Medical Journal Editors (ICMJE) announced that all trials starting enrollment after July 1, 2005 must be registered before they would be considered for publication in one of the 12 member journals of the Committee.[12] The aim was to reduce publication bias by making unpublished negative trials easier to discover.

Available trial registries include:

- ClinicalTrials.gov: http://clinicaltrials.gov

- World Health Organization International Clinical Trials Registry Platform (ICTRP)

- ISRCTN registry: http://isrctn.org/

It is not clear how effective trial registration is because many registered trials are never completely published.[129]

External validity

Judging external validity is more difficult than judging internal validity. "External validity refers to the question whether results are generalizable to persons other than the population in the original study."[130] A framework for assessing external validity proposes:[130]

- "The study population might not be representative for the eligibility criteria that were intended. It should be addressed whether the study population differs from the intended source population with respect to characteristics that influence outcome."

- "The target population will, by definition, differ from the study population with respect to geographical, temporal and ethnical conditions. Pondering external validity means asking the question whether these differences may influence study results."

- "It should be assessed whether the study's conclusions can be generalized to target populations that do not meet all the eligibility criteria."

References

- Stanley K (2007). "Design of randomized controlled trials". Circulation 115 (9): 1164–9. DOI:10.1161/CIRCULATIONAHA.105.594945. PMID 17339574.

- Torpy JM, Lynm C, Glass RM (2010). "Randomized controlled trials". JAMA 303 (12): 1216.

- A brief History of ICH.

- International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Guideline for Good Clinical Practice.

- McMahon AD, Conway DI, Macdonald TM, McInnes GT (2009). "The unintended consequences of clinical trials regulations". PLoS Med 6 (11): e1000131. DOI:10.1371/journal.pmed.1000131. PMID 19918557.

- Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG (2010-03-23). "The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed". BMJ 340: c723. DOI:10.1136/bmj.c723. Retrieved on 2010-03-25.

- Begg C, Cho M, Eastwood S, et al. (August 1996). "Improving the quality of reporting of randomized controlled trials. The CONSORT statement". JAMA 276 (8): 637–9. PMID 8773637.

- Moher D, Schulz KF, Altman D (April 2001). "The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials". JAMA 285 (15): 1987–91. PMID 11308435.

- "CONSORT 2010 Statement: Updated Guidelines for Reporting Parallel Group Randomized Trials". Ann Intern Med. DOI:10.1059/0003-4819-152-11-201006010-00232.

- Altman DG (May 2005). "Endorsement of the CONSORT statement by high impact medical journals: survey of instructions for authors". BMJ 330 (7499): 1056–7. DOI:10.1136/bmj.330.7499.1056. PMID 15879389. PMC 557224.

- Han C, Kwak KP, Marks DM, Pae CU, Wu LT, Bhatia KS et al. (2009). "The impact of the CONSORT statement on reporting of randomized clinical trials in psychiatry". Contemp Clin Trials 30 (2): 116–22. DOI:10.1016/j.cct.2008.11.004. PMID 19070681.

- De Angelis C, Drazen JM, Frizelle FA, et al. (September 2004). "Clinical trial registration: a statement from the International Committee of Medical Journal Editors". N Engl J Med 351 (12): 1250–1. DOI:10.1056/NEJMe048225. PMID 15356289.

- Drazen JM, Van Der Weyden MB, Sahni P, Rosenberg J, Marusic A, Laine C et al. (2009). "Uniform Format for Disclosure of Competing Interests in ICMJE Journals". N Engl J Med. DOI:10.1056/NEJMe0909052. PMID 19825973.

- Waber RL, Shiv B, Carmon Z, Ariely D (2008). "Commercial Features of Placebo and Therapeutic Efficacy". JAMA 299 (9): 1016–7.

- Sacks H, Chalmers TC, Smith H (February 1982). "Randomized versus historical controls for clinical trials". Am J Med 72 (2): 233–40. PMID 7058834.

- Cobb LA, Thomas GI, Dillard DH, Merendino KA, Bruce RA (May 1959). "An evaluation of internal-mammary-artery ligation by a double-blind technic". N Engl J Med 260 (22): 1115–8. PMID 13657350.

- Dimond EG, Kittle CF, Crockett JE (April 1960). "Comparison of internal mammary artery ligation and sham operation for angina pectoris". Am J Cardiol 5: 483–6. PMID 13816818.

- Moseley JB, O'Malley K, Petersen NJ, et al. (July 2002). "A controlled trial of arthroscopic surgery for osteoarthritis of the knee". N Engl J Med 347 (2): 81–8. DOI:10.1056/NEJMoa013259. PMID 12110735.

- McCarney R, Warner J, Iliffe S, van Haselen R, Griffin M, Fisher P (2007). "The Hawthorne Effect: a randomised, controlled trial". BMC Med Res Methodol 7: 30. DOI:10.1186/1471-2288-7-30. PMID 17608932. PMC 1936999.

- Wood L, Egger M, Gluud LL, Schulz KF, Jüni P, Altman DG et al. (2008). "Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: meta-epidemiological study". BMJ 336 (7644): 601–5. DOI:10.1136/bmj.39465.451748.AD. PMID 18316340. PMC 2267990.

- Moher D, Pham B, Jones A, Cook DJ, Jadad AR, Moher M et al. (1998). "Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses?". Lancet 352 (9128): 609–13. DOI:10.1016/S0140-6736(98)01085-X. PMID 9746022.

- Wears RL (2002). "Advanced statistics: statistical methods for analyzing cluster and cluster-randomized data". Acad Emerg Med 9 (4): 330–41. PMID 11927463.

- Campbell MK, Elbourne DR, Altman DG (2004). "CONSORT statement: extension to cluster randomised trials". BMJ 328 (7441): 702–8. DOI:10.1136/bmj.328.7441.702. PMID 15031246.

- Eldridge S, Ashby D, Bennett C, Wakelin M, Feder G (2008). "Internal and external validity of cluster randomised trials: systematic review of recent trials". BMJ. http://www.bmj.com/cgi/content/full/bmj.39517.495764.25v1 DOI:10.1136/bmj.39517.495764.25.

- Campbell MK, Fayers PM, Grimshaw JM (2005). "Determinants of the intracluster correlation coefficient in cluster randomized trials: the case of implementation research". Clin Trials 2 (2): 99–107. PMID 16279131.

- Campbell M, Grimshaw J, Steen N (2000). "Sample size calculations for cluster randomised trials. Changing Professional Practice in Europe Group (EU BIOMED II Concerted Action)". J Health Serv Res Policy 5 (1): 12–6. PMID 10787581.

- Wyatt JC, Wyatt SM (2003). "When and how to evaluate health information systems?". Int J Med Inform 69 (2–3): 251–9. DOI:10.1016/S1386-5056(02)00108-9. PMID 12810128.

- Ramsay CR, Matowe L, Grilli R, Grimshaw JM, Thomas RE (2003). "Interrupted time series designs in health technology assessment: lessons from two systematic reviews of behavior change strategies". Int J Technol Assess Health Care 19 (4): 613–23. PMID 15095767.

- Mills EJ, Chan AW, Wu P, Vail A, Guyatt GH, Altman DG (2009). "Design, analysis, and presentation of crossover trials". Trials 10: 27. DOI:10.1186/1745-6215-10-27. PMID 19405975. PMC 2683810.

- Sibbald B, Roberts C (1998). "Understanding controlled trials. Crossover trials". BMJ 316 (7146): 1719. PMID 9614025.

- D'Agostino RB Sr (2009). "The Delayed-Start Study Design". N Engl J Med 361: 1304–6. PMID 19776413.

- Olanow CW, Rascol O, Hauser R, Feigin PD, Jankovic J, Lang A, Langston W, Melamed E, Poewe W, Stocchi F, Tolosa E; ADAGIO Study Investigators (2009). "A Double-Blind, Delayed-Start Trial of Rasagiline in Parkinson's Disease". N Engl J Med 361: 1268–78. PMID 19776408.

- Sheiner LB, Beal SL, Sambol NC (July 1989). "Study designs for dose-ranging". Clin Pharmacol Ther 46 (1): 63–77. PMID 2743708.

- Ivanova A, Montazer-Haghighi A, Mohanty SG, Durham SD (January 2003). "Improved up-and-down designs for phase I trials". Stat Med 22 (1): 69–82. DOI:10.1002/sim.1336. PMID 12486752.

- Bornkamp B, Bretz F, Dmitrienko A, et al. (2007). "Innovative approaches for designing and analyzing adaptive dose-ranging trials". J Biopharm Stat 17 (6): 965–95. DOI:10.1080/10543400701643848. PMID 18027208.

- Zohar S, Chevret S (February 2003). "Phase I (or phase II) dose-ranging clinical trials: proposal of a two-stage Bayesian design". J Biopharm Stat 13 (1): 87–101. PMID 12635905.

- Hunsberger S, Rubinstein LV, Dancey J, Korn EL (July 2005). "Dose escalation trial designs based on a molecularly targeted endpoint". Stat Med 24 (14): 2171–81. DOI:10.1002/sim.2102. PMID 15909289.

- Hung HM (August 2000). "Evaluation of a combination drug with multiple doses in unbalanced factorial design clinical trials". Stat Med 19 (16): 2079–87. PMID 10931512.

- Zilm DH, Jacob MS, MacLeod SM, Sellers EM, Ti TY (1980). "Propranolol and chlordiazepoxide effects on cardiac arrhythmias during alcohol withdrawal". Alcohol Clin Exp Res 4 (4): 400–5. PMID 7004240.

- McAlister FA, Straus SE, Sackett DL, Altman DG (May 2003). "Analysis and reporting of factorial trials: a systematic review". JAMA 289 (19): 2545–53. DOI:10.1001/jama.289.19.2545. PMID 12759326.

- Stampfer MJ, Buring JE, Willett W, Rosner B, Eberlein K, Hennekens CH (1985). "The 2 x 2 factorial design: its application to a randomized trial of aspirin and carotene in U.S. physicians". Stat Med 4 (2): 111–6. DOI:10.1002/sim.4780040202. PMID 4023472.

- Gail M, Simon R (1985). "Testing for qualitative interactions between treatment effects and patient subsets". Biometrics 41 (2): 361–72. PMID 4027319.

- Sacks FM, Bray GA, Carey VJ, Smith SR, Ryan DH, Anton SD et al. (2009). "Comparison of weight-loss diets with different compositions of fat, protein, and carbohydrates". N Engl J Med 360 (9): 859–73. DOI:10.1056/NEJMoa0804748. PMID 19246357. PMC 2763382. Review in: Evid Based Nurs. 2009 Oct;12(4):109.

- COIITSS Study Investigators; Annane D, Cariou A, Maxime V, Azoulay E, D'honneur G et al. (2010). "Corticosteroid treatment and intensive insulin therapy for septic shock in adults: a randomized controlled trial". JAMA 303 (4): 341–8. DOI:10.1001/jama.2010.2. PMID 20103758.

- Mahon J, Laupacis A, Donner A, Wood T (1996). "Randomised study of n of 1 trials versus standard practice". BMJ 312 (7038): 1069–74. PMID 8616414.

- Guyatt G et al. (1988). "A clinician's guide for conducting randomized trials in individual patients". CMAJ 139: 497–503. PMID 3409138.

- Brookes ST et al. (2007). "'Me's me and you's you': Exploring patients' perspectives of single patient (n-of-1) trials in the UK". Trials 8: 10. DOI:10.1186/1745-6215-8-10. PMID 17371593.

- Langer JC et al. (1993). "The single-subject randomized trial. A useful clinical tool for assessing therapeutic efficacy in pediatric practice". Clinical Pediatrics 32: 654–7. PMID 8299295.

- World Medical Association. Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects. Retrieved on 2007-11-17.

- (1997). "World Medical Association declaration of Helsinki. Recommendations guiding physicians in biomedical research involving human subjects". JAMA 277: 925–6. PMID 9062334.

- Michels KB, Rothman KJ (2003). "Update on unethical use of placebos in randomised trials". Bioethics 17: 188–204. PMID 12812185.

- Temple R, Ellenberg SS (2000). "Placebo-controlled trials and active-control trials in the evaluation of new treatments. Part 1: ethical and scientific issues". Ann Intern Med 133: 455–63. PMID 10975964.

- Ellenberg SS, Temple R (2000). "Placebo-controlled trials and active-control trials in the evaluation of new treatments. Part 2: practical issues and specific cases". Ann Intern Med 133: 464–70. PMID 10975965.

- Kaul S, Diamond GA (2006). "Good enough: a primer on the analysis and interpretation of noninferiority trials". Ann Intern Med 145: 62–9. PMID 16818930.

- Piaggio G, Elbourne DR, Altman DG, Pocock SJ, Evans SJ (2006). "Reporting of noninferiority and equivalence randomized trials: an extension of the CONSORT statement". JAMA 295 (10): 1152–60. DOI:10.1001/jama.295.10.1152. PMID 16522836.

- Zwarenstein M, Treweek S, Gagnier JJ, et al. (2008). "Improving the reporting of pragmatic trials: an extension of the CONSORT statement". BMJ 337: a2390. PMID 19001484.

- Thomas KJ, MacPherson H, Thorpe L, et al. (September 2006). "Randomised controlled trial of a short course of traditional acupuncture compared with usual care for persistent non-specific low back pain". BMJ 333 (7569): 623. DOI:10.1136/bmj.38878.907361.7C. PMID 16980316. PMC 1570824.

- (August 1991). "Beneficial effect of carotid endarterectomy in symptomatic patients with high-grade carotid stenosis. North American Symptomatic Carotid Endarterectomy Trial Collaborators". N Engl J Med 325 (7): 445–53. PMID 1852179.

- Relton C, Torgerson D, O'Cathain A, Nicholl J (2010-03-24). "Rethinking pragmatic randomised controlled trials: introducing the 'cohort multiple randomised controlled trial' design". BMJ 340: c1066. DOI:10.1136/bmj.c1066. Retrieved on 2010-03-25.

- The Belmont Report. U.S. Department of Health & Human Services.

- The Belmont Report. National Institutes of Health.

- Lavery JV (2011-03-08). "How Can Institutional Review Boards Best Interpret Preclinical Data?". PLoS Med 8 (3): e1001011. DOI:10.1371/journal.pmed.1001011. Retrieved on 2011-03-10.

- Emanuel EJ, Miller FG (2001). "The ethics of placebo-controlled trials--a middle ground". N Engl J Med 345 (12): 915–9. PMID 11565527.

- Hausler T (2008-05-01). "In retrospect: When business became biology's plague". Nature 453 (7191): 38. DOI:10.1038/453038a. ISSN 0028-0836. Retrieved on 2008-11-12.

- Pastor J (2008-06-26). "The ethical basis of the null hypothesis". Nature 453 (7199): 1177. DOI:10.1038/4531177b. ISSN 0028-0836. Retrieved on 2008-11-12.

- Rothman KJ, Michels KB (1994). "The continuing unethical use of placebo controls". N Engl J Med 331 (6): 394–8. PMID 8028622.

- Djulbegovic B, Lacevic M, Cantor A, et al. (2000). "The uncertainty principle and industry-sponsored research". Lancet 356 (9230): 635–8. PMID 10968436.

- Cobb LA, Thomas GI, Dillard DH, Merendino KA, Bruce RA (1959). "An evaluation of internal-mammary-artery ligation by a double-blind technique". N Engl J Med 260: 1115–8.

- Tenery R, Rakatansky H, Riddick FA, et al. (2002). "Surgical 'placebo' controls". Ann Surg 235 (2): 303–7. PMID 11807373.

- Montori VM, Devereaux PJ, Adhikari NK, et al. (November 2005). "Randomized trials stopped early for benefit: a systematic review". JAMA 294 (17): 2203–9. DOI:10.1001/jama.294.17.2203. PMID 16264162.

- Bassler D, Briel M, Montori VM, Lane M, Glasziou P, Zhou Q, Heels-Ansdell D, Walter SD, Guyatt GH; STOPIT-2 Study Group (2010-03-24). "Stopping Randomized Trials Early for Benefit and Estimation of Treatment Effects: Systematic Review and Meta-regression Analysis". JAMA 303 (12): 1180–7. DOI:10.1001/jama.2010.310. Retrieved on 2010-03-25.

- Trotta F, Apolone G, Garattini S, Tafuri G (2008). "Stopping a trial early in oncology: for patients or for industry?". Ann Oncol. DOI:10.1093/annonc/mdn042.

- Slutsky AS, Lavery JV (March 2004). "Data Safety and Monitoring Boards". N Engl J Med 350 (11): 1143–7. DOI:10.1056/NEJMsb033476. PMID 15014189.

- Borer JS, Gordon DJ, Geller NL (April 2008). "When should data and safety monitoring committees share interim results in cardiovascular trials?". JAMA 299 (14): 1710–2. DOI:10.1001/jama.299.14.1710. PMID 18398083.

- Bassler D, Montori VM, Briel M, Glasziou P, Guyatt G (March 2008). "Early stopping of randomized clinical trials for overt efficacy is problematic". J Clin Epidemiol 61 (3): 241–6. DOI:10.1016/j.jclinepi.2007.07.016. PMID 18226746.

- Pocock SJ (November 2005). "When (not) to stop a clinical trial for benefit". JAMA 294 (17): 2228–30. DOI:10.1001/jama.294.17.2228. PMID 16264167.

- Schulz KF, Grimes DA (2005). "Multiplicity in randomised trials II: subgroup and interim analyses". Lancet 365 (9471): 1657–61. DOI:10.1016/S0140-6736(05)66516-6. PMID 15885299.

- Grant A (September 2004). "Stopping clinical trials early". BMJ 329 (7465): 525–6. DOI:10.1136/bmj.329.7465.525. PMID 15345605.

- O'Brien PC, Fleming TR (September 1979). "A multiple testing procedure for clinical trials". Biometrics 35 (3): 549–56. PMID 497341.

- Bauer P, Köhne K (December 1994). "Evaluation of experiments with adaptive interim analyses". Biometrics 50 (4): 1029–41. PMID 7786985. This method was used in PMID 18184958.

- DeMets DL, Ellenberg SS, Fleming TR (2002). Data Monitoring Committees in Clinical Trials: a Practical Perspective. New York: J. Wiley & Sons. ISBN 0-471-48986-7.

- Pocock SJ, Hughes MD (December 1989). "Practical problems in interim analyses, with particular regard to estimation". Control Clin Trials 10 (4 Suppl): 209S–221S. PMID 2605969.

- Bassler D, Briel M, Montori VM, Lane M, Glasziou P, Zhou Q, Heels-Ansdell D, Walter SD, Guyatt GH; STOPIT-2 Study Group (2010-03-24). "Stopping Randomized Trials Early for Benefit and Estimation of Treatment Effects: Systematic Review and Meta-regression Analysis". JAMA 303 (12): 1180–7. DOI:10.1001/jama.2010.310. Retrieved on 2010-03-25.

- Pocock S, Wang D, Wilhelmsen L, Hennekens CH (May 2005). "The data monitoring experience in the Candesartan in Heart Failure Assessment of Reduction in Mortality and morbidity (CHARM) program". Am Heart J 149 (5): 939–43. DOI:10.1016/j.ahj.2004.10.038. PMID 15894981.

- Wheatley K, Clayton D (February 2003). "Be skeptical about unexpected large apparent treatment effects: the case of an MRC AML12 randomization". Control Clin Trials 24 (1): 66–70. PMID 12559643.

- Abraham E, Reinhart K, Opal S, et al. (July 2003). "Efficacy and safety of tifacogin (recombinant tissue factor pathway inhibitor) in severe sepsis: a randomized controlled trial". JAMA 290 (2): 238–47. DOI:10.1001/jama.290.2.238. PMID 12851279.

- Hughes MD, Pocock SJ (December 1988). "Stopping rules and estimation problems in clinical trials". Stat Med 7 (12): 1231–42. PMID 3231947.

- Goodman SN (June 2007). "Stopping at nothing? Some dilemmas of data monitoring in clinical trials". Ann Intern Med 146 (12): 882–7. PMID 17577008.

- (2005). Statistical Monitoring of Clinical Trials: Fundamentals for Investigators. Berlin: Springer. ISBN 0-387-27781-1.

- Lachin JM (October 2006). "Operating characteristics of sample size re-estimation with futility stopping based on conditional power". Stat Med 25 (19): 3348–65. DOI:10.1002/sim.2455. PMID 16345019.

- Baum ML, Anish DS, Chalmers TC, Sacks HS, Smith H, Fagerstrom RM (1981). "A survey of clinical trials of antibiotic prophylaxis in colon surgery: evidence against further use of no-treatment controls". N Engl J Med 305 (14): 795–9. DOI:10.1056/NEJM198110013051404. PMID 7266633.

- Lau J, Antman EM, Jimenez-Silva J, Kupelnick B, Mosteller F, Chalmers TC (1992). "Cumulative meta-analysis of therapeutic trials for myocardial infarction". N Engl J Med 327 (4): 248–54. DOI:10.1056/NEJM199207233270406. PMID 1614465.

- Robinson KA, Goodman SN (2011). "A systematic examination of the citation of prior research in reports of randomized, controlled trials". Ann Intern Med 154 (1): 50–5. DOI:10.1059/0003-4819-154-1-201101040-00007. PMID 21200038.

- Lau J, Schmid CH, Chalmers TC (1995). "Cumulative meta-analysis of clinical trials builds evidence for exemplary medical care". J Clin Epidemiol 48 (1): 45–57; discussion 59–60. PMID 7853047.

- Hill KP, Ross JS, Egilman DS, Krumholz HM (August 2008). "The ADVANTAGE seeding trial: a review of internal documents". Ann Intern Med 149 (4): 251–8. PMID 18711155.

- Krumholz SD, Egilman DS, Ross JS (2011). "Study of neurontin: titrate to effect, profile of safety (STEPS) trial: a narrative account of a gabapentin seeding trial". Arch Intern Med 171 (12): 1100–7. DOI:10.1001/archinternmed.2011.241. PMID 21709111.

- Wood L, Egger M, Gluud LL, Schulz KF, Jüni P, Altman DG, Gluud C, Martin RM, Wood AJG, Sterne JAC (2008-03-15). "Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: meta-epidemiological study". BMJ 336 (7644): 601–5. DOI:10.1136/bmj.39465.451748.AD. Retrieved on 2008-03-14.

- Fleming TR (2011). "Addressing missing data in clinical trials". Ann Intern Med 154 (2): 113–7. DOI:10.1059/0003-4819-154-2-201101180-00010. PMID 21242367.

- Hauser WA, Rich SS, Annegers JF, Anderson VE (August 1990). "Seizure recurrence after a 1st unprovoked seizure: an extended follow-up". Neurology 40 (8): 1163–70. PMID 2381523.

- Hollis S, Campbell F (September 1999). "What is meant by intention to treat analysis? Survey of published randomised controlled trials". BMJ 319 (7211): 670–4. PMID 10480822. PMC 28218.

- Abraha I, Montedori A (2010-06-14). "Modified intention to treat reporting in randomised controlled trials: systematic review". BMJ 340: c2697. DOI:10.1136/bmj.c2697. Retrieved on 2010-06-17.

- Simpson SH, Eurich DT, Majumdar SR, Padwal RS, Tsuyuki RT, Varney J et al. (2006). "A meta-analysis of the association between adherence to drug therapy and mortality". BMJ 333 (7557): 15. DOI:10.1136/bmj.38875.675486.55. PMID 16790458. PMC 1488752. Review in: Evid Based Nurs. 2007 Jan;10(1):24. Review in: ACP J Club. 2006 Nov-Dec;145(3):80.

- (1980). "Influence of adherence to treatment and response of cholesterol on mortality in the coronary drug project". N Engl J Med 303 (18): 1038–41. PMID 6999345.

- Nüesch E, Trelle S, Reichenbach S, Rutjes AW, Bürgi E, Scherer M et al. (2009). "The effects of excluding patients from the analysis in randomised controlled trials: meta-epidemiological study". BMJ 339: b3244. DOI:10.1136/bmj.b3244. PMID 19736281. PMC 2739282.

- Sussman JB, Hayward RA (2010-05-04). "An IV for the RCT: using instrumental variables to adjust for treatment contamination in randomised controlled trials". BMJ 340: c2073. DOI:10.1136/bmj.c2073. Retrieved on 2010-05-06.

- Li Z, Chines AA, Meredith MP (2004). "Statistical validation of surrogate endpoints: is bone density a valid surrogate for fracture?". J Musculoskelet Neuronal Interact 4 (1): 64–74. PMID 15615079.

- Li Z, Meredith MP (2003). "Exploring the relationship between surrogates and clinical outcomes: analysis of individual patient data vs. meta-regression on group-level summary statistics". J Biopharm Stat 13 (4): 777–92. DOI:10.1081/BIP-120024209. PMID 14584722.

- Fleming TR, DeMets DL (1996). "Surrogate end points in clinical trials: are we being misled?". Ann Intern Med 125 (7): 605–13. PMID 8815760.

- la Cour JL, Brok J, Gøtzsche PC (2010). "Inconsistent reporting of surrogate outcomes in randomised clinical trials: cohort study". BMJ 341: c3653. DOI:10.1136/bmj.c3653. PMID 20719823. PMC 2923691.

- Cordoba G, Schwartz L, Woloshin S, Bae H, Gøtzsche PC (2010). "Definition, reporting, and interpretation of composite outcomes in clinical trials: systematic review". BMJ 341: c3920. DOI:10.1136/bmj.c3920. PMID 20719825. PMC 2923692.

- Ferreira-González I, Busse JW, Heels-Ansdell D, et al. (April 2007). "Problems with use of composite end points in cardiovascular trials: systematic review of randomised controlled trials". BMJ 334 (7597): 786. DOI:10.1136/bmj.39136.682083.AE. PMID 17403713. PMC 1852019.

- Freemantle N, Calvert M, Wood J, Eastaugh J, Griffin C (May 2003). "Composite outcomes in randomized trials: greater precision but with greater uncertainty?". JAMA 289 (19): 2554–9. DOI:10.1001/jama.289.19.2554. PMID 12759327.

- Lim E, Brown A, Helmy A, Mussa S, Altman DG (November 2008). "Composite outcomes in cardiovascular research: a survey of randomized trials". Ann Intern Med 149 (9): 612–7. PMID 18981486.

- Marcus PM, Bergstralh EJ, Zweig MH, Harris A, Offord KP, Fontana RS (June 2006). "Extended lung cancer incidence follow-up in the Mayo Lung Project and overdiagnosis". J Natl Cancer Inst 98 (11): 748–56. DOI:10.1093/jnci/djj207. PMID 16757699.

- Chan AW, Hróbjartsson A, Jørgensen KJ, Gøtzsche PC, Altman DG (2008). "Discrepancies in sample size calculations and data analyses reported in randomised trials: comparison of publications with protocols". BMJ 337: a2299. PMID 19056791.

- Charles P, Giraudeau B, Dechartres A, Baron G, Ravaud P (2009-05-12). "Reporting of sample size calculation in randomised controlled trials: review". BMJ 338: b1732. DOI:10.1136/bmj.b1732. Retrieved on 2009-05-13.

- Wang R, Lagakos SW, Ware JH, Hunter DJ, Drazen JM (2007). "Statistics in medicine--reporting of subgroup analyses in clinical trials". N Engl J Med 357 (21): 2189–94. DOI:10.1056/NEJMsr077003. PMID 18032770.

- Yusuf S, Wittes J, Probstfield J, Tyroler HA (1991). "Analysis and interpretation of treatment effects in subgroups of patients in randomized clinical trials". JAMA 266 (1): 93–8. PMID 2046134.

- Kent DM, Lindenauer PK (2010). "Aggregating and disaggregating patients in clinical trials and their subgroup analyses". Ann Intern Med 153 (1): 51–2. DOI:10.1059/0003-4819-153-1-201007060-00012. PMID 20621903.

- Altman DG, Bland JM (2003). "Interaction revisited: the difference between two estimates". BMJ 326 (7382): 219. PMID 12543843. PMC 1125071.

- Sun X, Briel M, Walter SD, Guyatt GH (2010). "Is a subgroup effect believable? Updating criteria to evaluate the credibility of subgroup analyses". BMJ 340: c117. DOI:10.1136/bmj.c117. PMID 20354011.

- Moher D, Jadad AR, Nichol G, Penman M, Tugwell P, Walsh S (1995). "Assessing the quality of randomized controlled trials: an annotated bibliography of scales and checklists". Control Clin Trials 16 (1): 62–73. PMID 7743790.

- Hartling L, Ospina M, Liang Y, Dryden DM, Hooton N, Krebs Seida J et al. (2009). "Risk of bias versus quality assessment of randomised controlled trials: cross sectional study". BMJ 339: b4012. DOI:10.1136/bmj.b4012. PMID 19841007.

- Higgins JPT, Green S (editors). Table 8.5.a: The Cochrane Collaboration's Tool for assessing risk of bias. Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.1 [updated September 2008]. The Cochrane Collaboration, 2008. Available from www.cochrane-handbook.org.

- Bangalore S, Wetterslev J, Pranesh S, Sawhney S, Gluud C, Messerli FH (2008). "Perioperative beta blockers in patients having non-cardiac surgery: a meta-analysis". Lancet 372 (9654): 1962–76. DOI:10.1016/S0140-6736(08)61560-3. PMID 19012955. Review in: Ann Intern Med. 2009 Mar 17;150(6):JC3-4.

- Jadad AR, Moore RA, Carroll D, et al. (1996). "Assessing the quality of reports of randomized clinical trials: is blinding necessary?". Control Clin Trials 17 (1): 1–12. DOI:10.1016/0197-2456(95)00134-4. PMID 8721797.

- Schulz KF, Chalmers I, Hayes RJ, Altman DG (1995). "Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials". JAMA 273 (5): 408–12. DOI:10.1001/jama.1995.03520290060030. PMID 7823387.

- Jefferson T, Di Pietrantonj C, Debalini MG, Rivetti A, Demicheli V (2009). "Relation of study quality, concordance, take home message, funding, and impact in studies of influenza vaccines: systematic review". BMJ 338: b354. DOI:10.1136/bmj.b354. PMID 19213766. PMC 2643439.

- Ross JS, Mulvey GK, Hines EM, Nissen SE, Krumholz HM (2009). "Trial Publication after Registration in ClinicalTrials.Gov: A Cross-Sectional Analysis". PLoS Med 6 (9): e1000144. DOI:10.1371/journal.pmed.1000144. Retrieved on 2009-09-10.

- Dekkers OM, von Elm E, Algra A, Romijn JA, Vandenbroucke JP (2009-04-17). "How to assess the external validity of therapeutic trials: a conceptual approach". Int J Epidemiol: dyp174. DOI:10.1093/ije/dyp174. Retrieved on 2009-04-18.